Quality Standards

AST Goals

Continuing in our series of performance and goal comparisons, we evaluate the quality requirements and method performance of aspartate aminotransferase (AST).

Aspartate Aminotransferase (AST) Quality: What's the right Goal? What's the actual performance?

Sten Westgard

FEBRUARY 2014

In 2013, we looked at a number of electrolyte and basic chemistry analytes, such as chloride, calcium, sodium, potassium, albumin, and total protein, to assess which goals were achievable and which methods were performing well. This month we turn to a different analyte: aspartate aminotransferase (AST).

What's the Right Goal for AST?

Even for laboratories that are actively trying to assess and assure quality, the question of goals, targets, and requirements is a challenge. The main question for labs is, "What is the right goal for this test?" While the 1999 Stockholm hierarchy identifies an approach, the ideal quality goals (goals based on evidence of how clinicians actually use the test results) are rare indeed. Even for well-studied assays that have been used in medicine for decades, there is still wide disagreement about which goal should be used for quality.

Acceptance criteria / quality requirements for AST

| Analyte | CLIA | Desirable Biologic Goal | RCPA | Rilibak | Spanish Minimum Consensus |
|---|---|---|---|---|---|
| AST | ± 20% | ± 16.69% | ± 5 U/L ≤ 60 U/L; ± 12% > 60 U/L | ± 21.0% | ± 21% |

You can probably see why we chose this particular analyte: it's one of the few cases where the Desirable Biologic "Ricos goal" is not the smallest quality requirement among the choices. CLIA, Rilibak, and the Spanish Minimum Consensus goals are all very similar, between 20% and 21%. The RCPA takes the prize for the tightest quality requirement.
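Because the RCPA limit mixes an absolute term (±5 U/L up to 60 U/L) with a percentage term (±12% above 60 U/L), it can only be ranked against the other goals at a stated concentration. The sketch below converts it to a percentage using the values from the table; the function and variable names are our own, for illustration. Working the arithmetic, ±5 U/L equals 16.69% at about 30 U/L (500 / 16.69 ≈ 29.96), so below roughly 30 U/L the Ricos goal is actually the tighter one, and above it the RCPA goal is the tightest.

```python
# RCPA limit for AST as listed in the table:
# +/- 5 U/L at or below 60 U/L, +/- 12% above 60 U/L.
RCPA_ABS_LIMIT_U_PER_L = 5.0
RCPA_CUTOFF_U_PER_L = 60.0
RCPA_PCT_LIMIT = 12.0

# The other goals are already expressed as percentages.
PERCENT_GOALS = {
    "CLIA": 20.0,
    "Ricos (desirable biologic)": 16.69,
    "Rilibak": 21.0,
    "Spanish minimum consensus": 21.0,
}


def rcpa_ast_tea_percent(conc_u_per_l: float) -> float:
    """Return the RCPA allowable error for AST as a percentage of concentration."""
    if conc_u_per_l <= 0:
        raise ValueError("concentration must be positive")
    if conc_u_per_l <= RCPA_CUTOFF_U_PER_L:
        # A fixed absolute limit becomes a larger percentage at low concentrations.
        return 100.0 * RCPA_ABS_LIMIT_U_PER_L / conc_u_per_l
    return RCPA_PCT_LIMIT


def tightest_goal(conc_u_per_l: float) -> tuple[str, float]:
    """Return (name, TEa %) of the tightest goal at a given AST concentration."""
    goals = dict(PERCENT_GOALS, RCPA=rcpa_ast_tea_percent(conc_u_per_l))
    name = min(goals, key=goals.get)
    return name, goals[name]


for conc in (25.0, 40.0, 60.0, 100.0):
    name, tea = tightest_goal(conc)
    print(f"{conc:5.0f} U/L: RCPA = {rcpa_ast_tea_percent(conc):5.2f}%, tightest = {name}")
```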

Where can we find data on method performance?

Here is our standard caveat for data comparisons: it's really hard to get a good cross-instrument comparison study. While every lab has data on their own performance, getting data about other instruments is tricky. If we want true apples-to-apples comparisons of performance, moreover, we need to find a study that evaluates different instruments within the same laboratory under similar conditions. Frankly, those studies are few and far between. Not many labs have the time or the space to conduct head-to-head studies of different methods and analyzers. I've seen a lot of studies, but few gold-standard comparisons.

Proficiency Testing surveys and External Quality Assessment Schemes can provide us with a broad view, but the data from those reports is pooled together. Thus, we don't get individual laboratory standard deviations, we get instead the all-group SD or perhaps the method-specific group SD. As individual laboratory SDs are usually smaller than group SDs, the utility of PT and EQA reports is limited. If we want a better idea of what an individual laboratory will achieve with a particular method, it would be good to work with data from studies of individual laboratories.

In the imperfect world we live in, we are more likely to find individual studies of instrument and method performance: a lab evaluates one instrument and its methods and compares them to a "local reference method" (usually the instrument it's going to replace). While we can calculate Sigma-metrics from such studies, their comparability is not quite as solid. It's more of a "green-apple-to-red-apple" comparison.
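The Sigma-metric is commonly calculated as Sigma = (TEa - |bias|) / CV, with all three terms in percent at the same concentration. The sketch below assumes exactly that; the bias, lab CV, and group CV are hypothetical numbers, not results from any study listed here. It also illustrates the PT/EQA caveat above: substituting a larger pooled group CV for an individual laboratory's CV lowers the Sigma-metric, understating what a single lab may achieve.

```python
def sigma_metric(tea_pct: float, bias_pct: float, cv_pct: float) -> float:
    """Sigma-metric = (TEa - |bias|) / CV, with every term expressed in percent."""
    if cv_pct <= 0:
        raise ValueError("CV must be positive")
    return (tea_pct - abs(bias_pct)) / cv_pct


# Hypothetical AST figures for illustration only; they are not taken from
# any of the studies listed in this article.
CLIA_TEA = 20.0
bias = 2.0        # % bias against the comparison method
lab_cv = 3.0      # single-laboratory CV, %
group_cv = 4.5    # pooled peer-group CV from a PT/EQA report, %

print(sigma_metric(CLIA_TEA, bias, lab_cv))    # 6.0
print(sigma_metric(CLIA_TEA, bias, group_cv))  # 4.0
```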

Given those limitations, here are a few studies of major analyzer performance that we've been able to find in recent years:

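- Evaluation des performances analytiques du systeme Unicel DXC 600 (Beckman Coulter) et etude de la transferabilite des resultats avec l’Integra 800 (Roche diagnostics) [Analytical performance evaluation of the Unicel DXC 600 system (Beckman Coulter) and study of the transferability of results with the Integra 800 (Roche Diagnostics)], A. Servonnet, H. Thefenne, A. Boukhira, P. Vest, C. Renard. Ann Biol Clin 2007;65(5):555-62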

- Validation of methods performance for routine biochemistry analytes at Cobas 6000 series module c501, Vesna Supak Smolcic, Lidija Bilic-Zulle, Elizabeta Fisic, Biochemia Medica 2011;21(2):182-190

- Analytical performance evaluation of the Cobas 6000 analyzer – special emphasis on trueness verification. Adriaan J. van Gammeren, Nelley van Gool, Monique JM de Groot, Christa M Cobbaert. Clin Chem Lab Med 2008;46(6):863-871.

- Analytical Performance Specifications: Relating Laboratory Performance to Quality Required for Intended Clinical Use. [cobas 8000 example evaluated] Daniel A. Dalenberg, Patricia G. Schryver, George G Klee. Clin Lab Med 33 (2013) 55-73.

- The importance of having a flexible scope ISO 15189 accreditation and quality specifications based on biological variation – the case of validation of the biochemistry analyzer Dimension Vista, Pilar Fernandez-Calle, Sandra Pelaz, Paloma Oliver, Maria Jose Alcaide, Ruben Gomez-Rioja, Antonio Buno, Jose Manuel Iturzaeta, Biochemia Medica 2013;23(1):83-9.

- External Evaluation of the Dimension Vista 1500 Intelligent Lab System, Arnaud Bruneel, Monique Dehoux, Anne Barnier, Anne Bouten, Journal of Clinical Laboratory Analysis 2012;23:384-397.

- Evaluation of the Vitros 5600 Integrated System in a Medical Laboratory, Baum H, Bauer I, Hartmann C et al, poster PDF provided at Ortho-Clinical Diagnostics website. Accessed December 10th, 2013.

- Practical Applications of Sigma Metrics to Evaluate Assay Quality, J. Litten, J. Householder, Poster from 2013 AACC Annual Meeting, July 28-August 1.

These studies in turn can give us multiple data points for some instruments (for instance, CVs for high and low controls), which we can use to evaluate each instrument's ability to achieve different quality requirements.

Can any instrument hit the Desirable Biologic TEa Goal for AST?

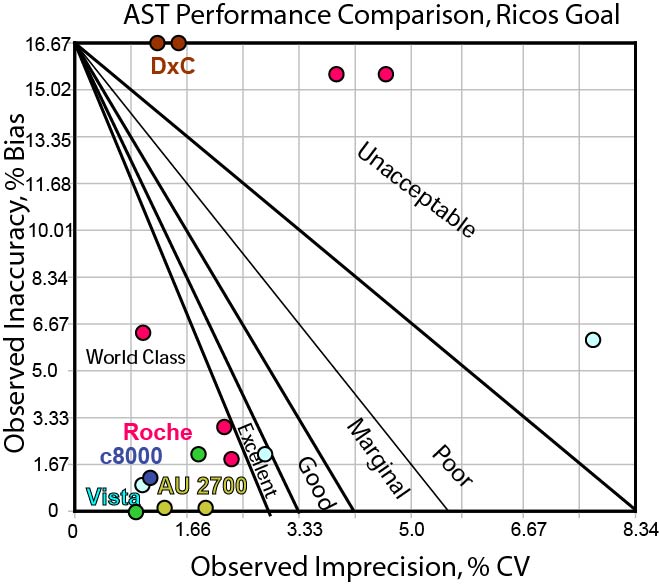

Here is a Method Decision Chart using the "Ricos Goal" of 16.69%.

[If you're not familiar with Method Decision Charts and Six Sigma, follow the links here to brush up on the subject.]

As you can see, this is a quality requirement that many methods can hit. Most assays are able to fit comfortably into the bull's-eye.

Overall, most methods have similar performance. If 16.69% were our goal, we might conclude that we have many vendors to choose from.
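On a Method Decision Chart, a method's operating point is its (CV, bias) pair, and it lands in the bull's-eye when |bias| + 6 × CV ≤ TEa, that is, when its Sigma-metric is at least 6. The sketch below assumes the band labels and cut-offs commonly drawn on Westgard decision charts (world class, excellent, good, marginal, poor, unacceptable at 6, 5, 4, 3, 2 Sigma); the three methods and their bias/CV values are hypothetical.

```python
# Performance bands commonly drawn on a Westgard Method Decision Chart.
# The cut-offs are assumed for this sketch: 6 Sigma and above is the
# "bull's-eye" (world class).
ZONES = [
    (6.0, "world class"),
    (5.0, "excellent"),
    (4.0, "good"),
    (3.0, "marginal"),
    (2.0, "poor"),
]


def mdc_zone(tea_pct: float, bias_pct: float, cv_pct: float) -> str:
    """Place a method's operating point (CV, bias) into a decision-chart band."""
    sigma = (tea_pct - abs(bias_pct)) / cv_pct
    for cutoff, label in ZONES:
        if sigma >= cutoff:
            return label
    return "unacceptable"


RICOS_TEA = 16.69
# Hypothetical operating points (bias %, CV %) for three unnamed methods.
methods = {"Method A": (1.0, 2.0), "Method B": (3.0, 3.5), "Method C": (6.0, 4.0)}

for name, (bias, cv) in methods.items():
    print(f"{name}: {mdc_zone(RICOS_TEA, bias, cv)}")
```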

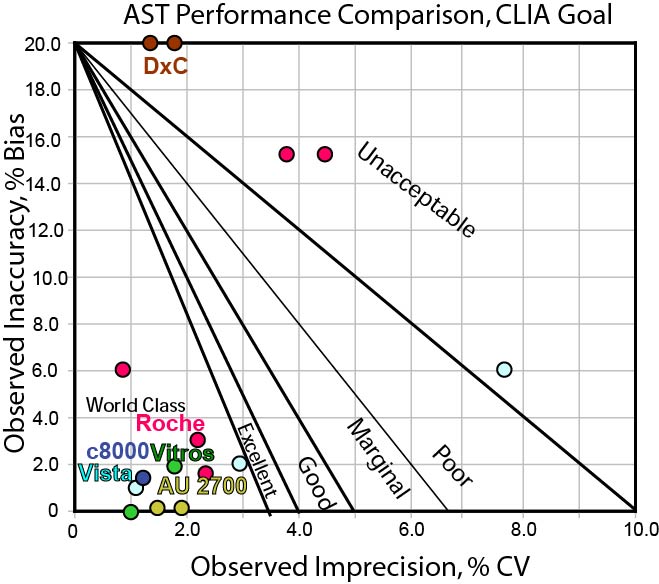

Can any instrument hit the CLIA TEa goal for AST?

This goal is wider, but essentially the same set of methods is still hitting the bull's-eye. The methods that couldn't hit the Ricos goal still struggle to hit the CLIA goal.

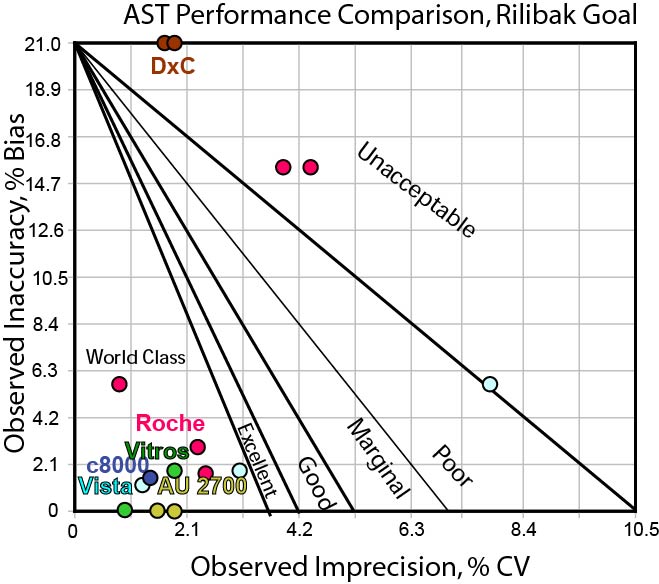

Can any instrument hit the Rilibak interlaboratory comparison goal for AST?

With the Rilibak goal practically the same as the CLIA goal, we don't see much difference in how methods perform. Again, the methods that couldn't hit the Ricos goal or the CLIA goal still can't hit the Rilibak goal.
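Since Sigma = (TEa - |bias|) / CV, widening TEa by one percentage point, as in moving from CLIA's 20% to Rilibak's 21%, raises any method's Sigma-metric by exactly 1 / CV. For a method with a 3% CV that is about a third of a Sigma, so only a method already sitting near a zone boundary would change its verdict. The sketch below assumes a hypothetical method with 2% bias and 3% CV; RCPA is left out because its limit depends on concentration.

```python
# TEa goals for AST from the table (percent). RCPA is omitted because
# its limit is concentration-dependent.
GOALS = {"Ricos": 16.69, "CLIA": 20.0, "Rilibak": 21.0}


def sigma_metric(tea_pct: float, bias_pct: float, cv_pct: float) -> float:
    """Sigma-metric = (TEa - |bias|) / CV, all terms in percent."""
    return (tea_pct - abs(bias_pct)) / cv_pct


def sigma_by_goal(bias_pct: float, cv_pct: float) -> dict[str, float]:
    """Sigma-metric of one method under each percentage goal."""
    return {name: sigma_metric(tea, bias_pct, cv_pct) for name, tea in GOALS.items()}


# Hypothetical method: 2% bias, 3% CV.
results = sigma_by_goal(2.0, 3.0)
for name, sigma in results.items():
    print(f"{name:8s} {sigma:.2f}")

# Widening TEa by 1 percentage point raises Sigma by exactly 1 / CV.
gain = results["Rilibak"] - results["CLIA"]
print(f"CLIA -> Rilibak gain: {gain:.2f} Sigma")
```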

Conclusion

With quality requirements, no single source is both completely perfect and practical. Laboratories need to be careful when selecting quality goals, making sure that they choose the goals they are mandated to meet (in the US, labs must be able to achieve CLIA targets), but also that their goals are practical and in harmony with the actual clinical use of the test in their health system.

It appears here that all three goals have the ability to differentiate method performance. Or, perhaps put another way, the methods that have poor performance are obvious no matter what quality goal is used.

Remember, from our perspective, a goal that no one (no method) can hit is not useful, except perhaps to instrument researchers and design engineers, for whom it becomes an aspirational target: designing the next generation of methods to achieve that ultimate level of performance. Neither is it helpful to use a goal that every method can hit; that might only indicate that we have lowered the bar too far, and that actual clinical use could be tightened and improved to work on a finer scale. It appears that many manufacturers have world-class AST methods (although a few are not doing well). So a 16.69% goal can be practical, but in terms of differentiating manufacturers' performance, the 20% and 21% goals are equally able to separate the wheat from the chaff. Laboratories should confirm that whichever of these goals they choose matches the clinical expectations and use of the test results.
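The argument above, that a useful goal is one some methods can hit but not all, can be made concrete by counting how many methods reach 6 Sigma under each goal. In the sketch below the five (bias, CV) operating points are hypothetical, and the 8% and 60% goals are invented extremes included only to show the two non-discriminating cases; neither is a published AST requirement.

```python
# Hypothetical operating points (bias %, CV %) for five unnamed methods.
METHODS = [(1.0, 2.0), (1.5, 2.2), (2.0, 2.4), (5.0, 4.0), (8.0, 5.0)]

GOALS = {
    "Ricos": 16.69,
    "CLIA": 20.0,
    "Rilibak": 21.0,
    "too tight (hypothetical)": 8.0,
    "too loose (hypothetical)": 60.0,
}


def count_world_class(tea_pct, methods, threshold=6.0):
    """Number of methods whose Sigma-metric reaches the threshold at this TEa."""
    return sum(1 for bias, cv in methods if (tea_pct - abs(bias)) / cv >= threshold)


def is_discriminating(tea_pct, methods, threshold=6.0):
    """A goal separates methods only if some, but not all, reach the threshold."""
    passing = count_world_class(tea_pct, methods, threshold)
    return 0 < passing < len(methods)


for name, tea in GOALS.items():
    passing = count_world_class(tea, METHODS)
    print(f"{name}: {passing}/{len(METHODS)} at >= 6 Sigma, "
          f"discriminating = {is_discriminating(tea, METHODS)}")
```

With these made-up points, the same three methods pass under the Ricos, CLIA, and Rilibak goals while the two weaker ones fail under all three, mirroring the pattern described above; the extreme goals pass either none or all of them.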

If you liked this article, you might also like

- What's the Right Goal?

- What's the Right Goal? An Example

- The Stockholm Consensus Hierarchy

- Can any method hit the Sodium target?

- Can any method hit the Potassium target?

- Can any method hit the Albumin target?

- Can any method hit the Chloride target?

- Can any method hit the Total Protein target?

- Can any method hit the ALT target?