Guest Essay

Approaches to Clinical Laboratory Utilization

Art Eggert

Dr. Art Eggert discusses the evolution of clinical laboratory utilization. Do you remember back when laboratories used to be a profit center instead of a cost center? Dr. Eggert traces the history of laboratory testing and its costs, and discusses how to optimize laboratory testing benefits while minimizing the expense.

- Dollars please!

- Riding the tide!

- The root cause

- Mix and match!

- Follow the liter (of IV fluid)

- Follow the yellow brick road!

- No time to say "hello" "goodbye"

- Doing what comes naturally!

- The game plan

- Biography: Art Eggert, Ph.D.

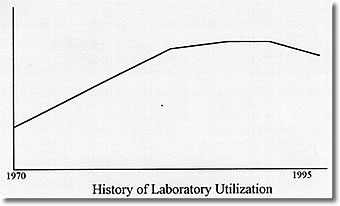

The overutilization of the clinical laboratory has become a recurring theme of editorials and professional presentations over the last several years. That overutilization is both actual and mythical, depending on what testing one is discussing, and is rooted in a past that is very different from what the present is and the future will be. In the past, more testing meant more revenue for the clinical laboratory and its parent institution. Laboratory charges could be manipulated to produce revenue to support numerous other programs in hospitals and clinics that had to be maintained as loss leaders or whose costs had to be hidden from the eyes of prying regulators or journalists. The laboratory was the "cash cow" of the medical establishment, and physicians were encouraged to "feed" that cow with all the test requests that they could generate so that it could be milked to the greatest extent. It was reasoned that since some information was good, more information was better, and unnecessary information could always be ignored. It was a seller’s market that would have made P. T. Barnum drool.

Dollars please!

The problem with the scheme was that someone had to pay the bill. Gradually, those bill payers found their costs of business going up and their clients unwilling to ante up the additional dollars to keep the system growing at its historical 15% per year. The results of this payer revolt are well known. Health Maintenance Organizations have proliferated and mushroomed. The federal government has tightened the reimbursement requirements for Medicare, and the states have followed suit with the support programs for the indigent that they operate. As cost containment has become the cry of the payers, the cash cow can no longer be milked for institutional profit and is, in fact, in grave danger of being sold for hamburger.

Riding the tide!

Before the tide of cost containment destroys the laboratory on the rocks of short-term financial savings, thus compromising the most useful tool that physicians have to diagnose and monitor their patients, it is time for reasonable people from among those who run the laboratories, pay the bills and prescribe the tests to develop ways to use the laboratory that are both cost-effective and medically useful. Together, those ways make up the oft-alluded-to utilization strategy. That strategy must contain four fundamental elements, as will be developed in the following discussion.

The root cause

Since the basis of all medical actions is the clinical diagnosis, outlining how the clinical laboratory participates in producing that diagnosis is fundamental to defining appropriate laboratory utilization. In this regard there are three important factors affecting cost-effectiveness: the cost of parallel laboratory testing, the cost of serial laboratory testing and the cost of not testing.

Parallel testing means ordering a number of tests at the same time so that abnormalities in any of the tests can be found quickly and used in making the diagnosis. This is a good medical strategy to eliminate diseases from consideration, and it is relatively inexpensive if all the tests are potential sources of information and are performed on the same analyzer. This, however, is rarely the case. A chemistry screening profile, for example, may be run entirely on one random-access analyzer, but usually it is composed of tests that are easy to analyze by related technology. Only a few tests out of 20 may have any bearing on a diagnosis of gout or heart attack. Even when a significant portion of the cost is due to just placing the specimen on the one analyzer, each additional test is not free. All tests must meet quality control requirements and be followed up if they are outside some warning range, even if they have no bearing on what the care provider is trying to diagnose. Since laboratories are working more and more to devote their scarce manpower to reviewing exceptions rather than normal results, unnecessary tests mean unnecessary personnel time spent and additional testing cost. When this is done to provide "hollow information," that is, information which will not be used in patient care, then it is an unneeded medical care cost. Moreover, parallel testing is sometimes not done on a single instrument; a hepatitis panel is one example. Since the tests in such a panel are not all independent of each other, running all of them, regardless of whether some have already been made meaningless by the results of others, will, at minimum, cause extra specimens to be added to the runs at some of the workstations and may even cause extra runs to be made at substantial cost. Collectively, more than half of the testing done in the parallel mode is wasted, and its omission would not degrade patient care in the slightest.

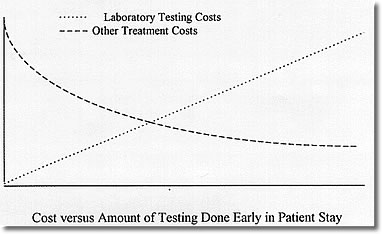

The opposite of parallel testing is serial testing. This means that tests are done one after another, each predicated on the result of a previous test or tests. While such testing yields maximum efficiency in the use of the clinical laboratory, it also increases the cost of patient treatment because patients have to be hospitalized longer awaiting results or must make additional visits to a clinic. There is clearly a tradeoff between laboratory costs and length-of-stay costs based on how much testing is done in parallel and how much is done serially.
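The tradeoff can be made concrete with a toy cost model. The sketch below is illustrative only: the function name, the per-test cost, the daily bed cost, and the assumption that every round of testing adds a fixed waiting period are all hypothetical placeholders, not figures from this essay.

```python
def total_cost(tests_per_round, cost_per_test=10.0, cost_per_day=500.0,
               days_per_round=1.0):
    """Return laboratory cost plus length-of-stay cost for a testing plan.

    tests_per_round lists how many tests are ordered in each sequential round:
    a single round is purely parallel, many small rounds are purely serial.
    All dollar figures are made-up placeholders (assumptions).
    """
    lab_cost = cost_per_test * sum(tests_per_round)
    stay_cost = cost_per_day * days_per_round * len(tests_per_round)
    return lab_cost + stay_cost


# Hypothetical plans for the same diagnostic question.
parallel = total_cost([20])      # order the whole panel at once
serial = total_cost([2, 2, 1])   # three rounds, each guided by the last
mixed = total_cost([5, 2])       # small screen, then targeted follow-up

for name, cost in [("parallel", parallel), ("serial", serial), ("mixed", mixed)]:
    print(f"{name}: ${cost:.2f}")
```

With these placeholder figures the waiting cost dominates, so the single parallel round comes out cheapest (700 versus 1550 for the serial plan and 1070 for the mixed plan). Lowering `cost_per_day` or raising `cost_per_test` shifts the balance toward serial testing, which is the point of the tradeoff.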

Finally, there is the cost of doing no testing. The operative question here is "Is there any difference in the course of treatment that will be followed based on whether the test is normal or abnormal?" If the answer is "no," then the test is being done to "treat the doctor" rather than the patient. Such testing is clearly not justified.

Mix and match!

The greatest improvements in utilization for diagnosis will be obtained by developing diagnostic testing patterns in which a limited number of screening tests are followed automatically by additional laboratory testing if they are positive for a disease of interest. This will require that physicians indicate to the laboratory what they suspect and that the laboratory respond with the appropriate testing to confirm or rule out the particular diagnoses.
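One way to picture such a testing pattern is as a small rule table keyed by the suspected diagnosis. The following is a minimal sketch under stated assumptions: the rule table, the condition and test names, and the `plan_tests` function are hypothetical placeholders and carry no clinical meaning.

```python
# Hypothetical rules: suspected diagnosis -> (screening tests, follow-up tests).
# All names are illustrative placeholders, not clinical recommendations.
REFLEX_RULES = {
    "suspected_condition_a": (["screen_1"], ["confirm_1", "confirm_2"]),
    "suspected_condition_b": (["screen_2", "screen_3"], ["confirm_3"]),
}


def plan_tests(suspected, run_test):
    """Run the screening tests and add follow-ups only if a screen is positive.

    run_test is a caller-supplied callable that takes a test name and
    returns True when the result is positive.
    """
    screens, follow_ups = REFLEX_RULES[suspected]
    ordered = []
    any_positive = False
    for test in screens:
        ordered.append(test)
        if run_test(test):
            any_positive = True
    if any_positive:
        # Follow-up testing is triggered automatically, without a new order.
        ordered.extend(follow_ups)
    return ordered


# Example: only screen_3 comes back positive, so confirm_3 is added.
print(plan_tests("suspected_condition_b", lambda test: test == "screen_3"))
```

The physician supplies only the suspicion; the laboratory's rule table decides which follow-up work, if any, is performed.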

Follow the liter (of IV fluid)

Once a diagnosis has been made, the progress of the disease and the treatment need to be carefully monitored, often by laboratory testing. Historically, this monitoring has been an art that each physician has practiced independently, apart from any practice standards. Moreover, lawsuits have encouraged excess monitoring tests to guarantee that any abnormality will be documented and responded to. Unfortunately, multiple tests that track the same body response and testing more frequent than required have both been common. Worse yet, some monitoring tests served no useful purpose at all but were done for "completeness" of the medical record.

Follow the yellow brick road!

It is apparent that a new method of patient management after diagnosis is imperative to reduce unnecessary testing of the type indicated. The increasing adoption of treatment protocols or clinical pathways is an important step toward such utilization control through standardization. Treatment pathways are designed to manage a minimum of 80% of the patients with a particular diagnosis. Those who fall outside the pathway are generally those with additional complications or multiple diseases. Establishing the appropriate group of tests for a pathway can therefore define medical practice and minimize inappropriate test ordering. This mechanism can be further enhanced by reviewing the charts of patients immediately after discharge to determine whether the ordering by the physicians was appropriate for the diagnosis.
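The post-discharge chart review described above amounts to comparing what was ordered against the pathway's test list. A minimal sketch follows, assuming a hypothetical pathway table and made-up test names; real pathways would also carry timing and frequency rules that this sketch ignores.

```python
# Hypothetical pathway definition: the tests a pathway calls for per diagnosis.
PATHWAY_TESTS = {"example_diagnosis": {"test_a", "test_b", "test_c"}}


def review_orders(diagnosis, ordered_tests):
    """Split a discharged patient's orders into on- and off-pathway tests."""
    allowed = PATHWAY_TESTS[diagnosis]
    ordered = set(ordered_tests)
    return {
        "on_pathway": sorted(ordered & allowed),    # ordered and expected
        "off_pathway": sorted(ordered - allowed),   # ordered but not expected
        "not_ordered": sorted(allowed - ordered),   # expected but never ordered
    }


print(review_orders("example_diagnosis", ["test_a", "test_x"]))
```

Off-pathway orders are not necessarily wrong, since some patients have complications that take them outside the pathway, but they are the ones worth a reviewer's attention.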

No time to say "hello" "goodbye."

While turnaround time was implicit in the discussion of serial versus parallel testing above, turnaround time cannot be reduced enough to limit utilization simply by improving the speed of the analyzers and the processes around them, although that can help. More critical is the development of a disease model for each patient to which the laboratory work is added as it is obtained. That model, of course, should be contained in the medical record, which needs to be computerized. With an up-to-date active problem list, as well as a list of the current medications and a record of the vital signs and symptoms, the needed laboratory work could be deduced, ordered, collected and performed in a much more timely fashion. In fact, a recommended sequence of tests for several days could be proposed to the patient care provider for general approval to facilitate patient management, with changes and overrides possible by the physician or by the computer as the patient's condition changes. While such a general medical management model may still be a decade off, a more limited model might be put in place for laboratory-intensive pathways that are peculiar to a particular medical facility. The effort to develop such a model should produce big dividends in fewer test requests and fewer stat orders.

Doing what comes naturally!

Cost containment invariably means that a laboratory must do what it does best or what it must do to meet service demands and must outsource other procedures. Cost/benefit analysis here is very important. It is also important to reduce the number of specimens outsourced for expensive procedures. To contain outsourcing cost, one must develop a utilization control strategy that discourages unnecessary sendouts and channels necessary sendouts to the least expensive procedures at the most cost-effective vendors. The first can be accomplished by establishing a threshold for approval that must be met to have outside testing approved. The second can be accomplished by developing a series of ordering sets that lead the physician to use outside testing appropriately. Finally, preferred reference laboratories should be mandated to reduce cost and quality problems for desired service.
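The approval threshold and the preferred-vendor rule can be sketched together as a simple routing function. This is an illustration under assumptions: the `Vendor` record, the `route_sendout` function, and the reading of the threshold as a price limit are all hypothetical; an institution might instead define its threshold in terms of clinical justification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vendor:
    name: str         # hypothetical reference laboratory
    price: float      # placeholder price for the requested procedure
    preferred: bool   # True if on the institution's mandated list


def route_sendout(vendors, approval_threshold, approved=False):
    """Return the cheapest preferred vendor, or None if the request is held.

    Assumption: a request priced above approval_threshold needs explicit
    approval before it may be sent out.
    """
    candidates = [v for v in vendors if v.preferred]
    if not candidates:
        return None  # no preferred vendor offers the procedure
    best = min(candidates, key=lambda v: v.price)
    if best.price > approval_threshold and not approved:
        return None  # hold the request until it has been approved
    return best


vendors = [
    Vendor("lab_x", 120.0, True),
    Vendor("lab_y", 80.0, True),
    Vendor("lab_z", 40.0, False),  # cheapest, but not a preferred vendor
]
print(route_sendout(vendors, approval_threshold=100.0))
```

Here the non-preferred vendor is never considered even though it is cheapest, which reflects the essay's point that preferred reference laboratories are mandated for quality as well as cost.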

The game plan

Successful utilization control therefore has four related components that must be put in place:

1) Testing strategies that strike a balance between parallel and serial testing in the diagnosis phase to minimize total costs, that is, laboratory plus length-of-stay costs.

2) Well-structured pathways that use the laboratory to provide no more costly information than needed to assure sound treatment management.

3) Automated patient models that help patient care physicians and laboratorians determine the best way to monitor a patient's progress, and

4) An outsourcing strategy that eliminates providing costly services internally while controlling the costs of tests sent to reference laboratories.

To implement such a comprehensive utilization strategy requires moving beyond the clinical laboratory’s traditional role, which was to provide whatever was requested as quickly and accurately as possible. The new strategy requires a series of proactive steps. The first step is to establish a steering team that works to identify opportunities in the form of utilization problems that need to be solved. Step two is to develop approaches to address the problems as they are identified. One type of solution will not work for all the problems. For example, a committee may be needed to establish a laboratory utilization policy. Individual laboratorians may have to work with clinicians to establish treatment pathways. Action teams may be needed to develop sets of diagnostic orders. Clinical pathology residents may need to review outside orders. The third step is to develop methods to measure the effectiveness of the utilization control effort. The final step is not to view anything that has been done as the final step. The laboratory environment will forever be a challenge as the health care world moves continually into uncharted territory.

Biography: Arthur Eggert

Arthur Eggert is a Professor in the Department of Pathology and Laboratory Medicine at the University of Wisconsin-Madison. He has a PhD in analytical chemistry from the University of Wisconsin and a strong background in computer science. In 1972 he became director of the data processing section of the clinical laboratories at the University of Wisconsin Hospital. He built the specimen control section and the phlebotomy program in addition to the data processing group. He was the project leader for the university-industrial effort to write the RelationaLABCOM laboratory information system. He developed the CHEMPROF computer program to teach problem solving to general chemistry students and wrote a textbook on clinical laboratory instrumentation, a course he taught for 13 years. He has led the re-engineering project in the clinical laboratory for the past 4 years and has been the chief of operations of the clinical laboratories and chair of the clinical pathology executive committee for the last 17 months.