Patient Safety Concepts

The War on Error

We explore the nature of Error, its varieties and occurrences in the lab, and consider the proposed changes in QC through the perspective of Swiss cheese. No. We're not kidding. Swiss cheese.

The War on Error: Complete Victory or a Long, Hard Slog?

- Know your enemy: What's an error, after all?

- The defenses against error: Swiss cheese

- QC for the future? Declaring victory, risking defeat

- The optimistic, realistic goal: Chronic unease

- References

January 2006

At the 2005 QC for the Future conference, Dr. Joe Boone of the CDC provided a historical summary of over 40 years of QC regulations in the laboratory:

“There is clearly a hole in the QC world today. How big that hole is, whether it is growing, and whether it is already too big depends on your perspective.”[1]

Despite all the regulations, inspections, professional training, and ever more advanced technology, medical error persists and even, to many observers and accounts [2], proliferates. 2006 will herald fresh assaults on the problem, using the new weapons provided by risk management, patient safety principles and electronic medical records.

Is this the year we win the fight?

Know your Enemy: What's an Error, after all?

Defining an error seems like a simple task – we all know one when we see one, right? But often it's only after the fact that we can identify the error. Errors are obvious with hindsight, but they are frequently invisible to us while they are occurring. Sometimes we can't even conceive of them occurring in the future. In retrospect, errors look inevitable. But in foresight, they can look impossible.

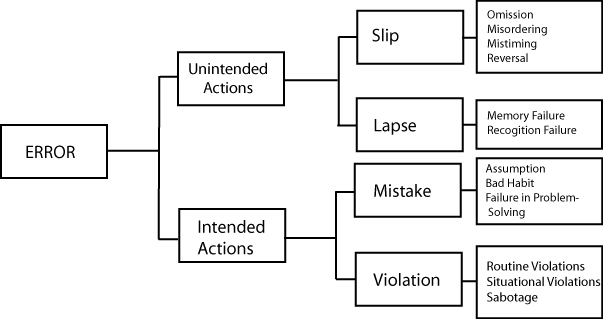

Fortunately for us, the literature of patient safety, safety culture, and human factors research has devoted a great deal of effort to defining just what an error is, as well as the different kinds of errors. Here we briefly present a taxonomy of error, with examples of each kind in a laboratory context. For many of these examples, we are indebted to Valerie Ng's article in the October 2005 LabMedicine, “QC for the Future: Laboratory Issues – POCT and POL concerns.”[3]

ERROR: The failure of planned actions to achieve their desired goal, where this occurs without some unforeseeable or chance intervention.[4]

The reason for the qualifier in this definition is to distinguish an error from bad luck. If you fail to get a test result from an instrument because you didn't add enough reagent, that's an error. If you fail to get a test result because a madman burst into the lab and took a sledgehammer to the instrument, that's bad luck (although an error may have occurred at security).

The first group of errors are slips, lapses, and monitor failures. In these errors the plan was good, but somehow the execution failed; the errors were unintended.

| Type of Error | Definition | Laboratory Example | Analytical Example |
|---|---|---|---|
| Slip | Our attention is distracted from the task; we fail to follow the steps of the plan. | Accidental switching of blood specimens[5] | We run controls, but fail to inspect the control results. |
| Lapse | We forget one or more of the steps of the plan. | “The particular test system used require the application of equal volumes of reagents A and B. Yet, a new vial of only 1 of the 2 reagents is often requested.”[3] | We run controls, plot and interpret results correctly, but fail to recognize an out-of-control result. |
| Monitor failure | We fail to recognize a situation correctly, or fail to monitor it correctly. | “Reagent bottles containing clear solutions have been refilled with water. ('Looks like water, must be water')”[3] | We run controls, plot and interpret results, recognize an out-of-control result, but then fail to troubleshoot. |

It's quite easy to identify many of the slips, lapses, and monitor failures that occur in the laboratory. In the larger picture, we can also see monitor failures in the Maryland General scandal – nearly every level of management, in addition to inspectors, failed to note problems in the lab.

The second group of errors are mistakes. This is where we execute the plan, but the plan was not adequate to the goal. We can break mistakes down further into two types: rule-based mistakes and knowledge-based mistakes.

Rule-based mistakes:

| Type of Error | Definition | Laboratory Example | Analytical Example |
|---|---|---|---|
| Assumption | We apply a good rule or plan to the wrong situation. | "Reagents from different kits/lots are used interchangeably in a well-intentioned attempt to save money."[3] | We use 2 SD control limits even though we know about 5% (1 in 20) of control values will exceed those limits and generate false rejections. But we assume we'll be able to tell which flags are "real" errors. |
| Habits | We apply a bad rule to the situation (something that "gets the job done"). | “The particular test system used require the application of equal volumes of reagents A and B. Yet, a new vial of only 1 of the 2 reagents is often requested.”[3] | We use 2 SD control limits and repeat the controls until the values fall "in." |

The classic analytical "bad habit" is repeating controls until they are “in.” We can consider this a habit because often the technologists who have this habit don't even know this is a violation. They've never been educated that repeating a control is a bad rule to use in the situation. Complicating this bad rule is another bad rule: using 2s limits. Entire laboratories, hospitals and even manufacturers are still in the habit of implementing this bad rule, even though it is clear that the rule generates a lot of false alarms, which are gradually ignored, until all alarms are considered false.
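The arithmetic behind these false alarms can be sketched in a few lines of Python. This is a minimal illustration, not a validated QC design tool: it assumes control values are independent and Gaussian and that a single 1-2s rule is applied (reject the run if any control falls outside the mean ± 2 SD). The function names are hypothetical. The sketch shows how false rejections grow with the number of controls per run, and how "repeat until in" lets a real, systematic error slip through.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function, via math.erf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def p_outside_2sd(shift_sd: float = 0.0) -> float:
    """Chance that one control value falls outside mean +/- 2 SD when the
    method mean has shifted by `shift_sd` standard deviations."""
    p_inside = normal_cdf(2.0 - shift_sd) - normal_cdf(-2.0 - shift_sd)
    return 1.0 - p_inside


def false_rejection_rate(n_controls: int) -> float:
    """Chance that a stable run (no shift) is flagged by a 1-2s rule
    when n_controls independent control values are checked."""
    p_in = 1.0 - p_outside_2sd(0.0)
    return 1.0 - p_in ** n_controls


def p_accept_by_repeating(shift_sd: float, max_repeats: int) -> float:
    """Given an initial flag, chance that at least one of up to
    max_repeats repeated controls falls back 'in', so the run is accepted
    even though the method really has shifted by shift_sd SD."""
    p_out = p_outside_2sd(shift_sd)
    return 1.0 - p_out ** max_repeats


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(f"N={n}: false rejection rate {false_rejection_rate(n):.1%}")
    print(f"2 SD shift, up to 3 repeats: accepted {p_accept_by_repeating(2.0, 3):.1%}")
```

Under these assumptions a stable run with one control is falsely flagged a little under 5% of the time, rising to roughly 9% with two controls. And when the method really has shifted by 2 SD, about half of all repeats land back "in," so allowing three repeats gives roughly a 7-in-8 chance of accepting the bad run.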

Knowledge-based mistakes

Knowledge-based mistakes occur when we are presented with a novel situation or problem and are forced to improvise a solution (i.e., no existing plan covers the problem). Here our errors stem from our lack of knowledge of the system and from failures in problem-solving.

| Type of Error | Definition | Laboratory Example | Analytical Example |
|---|---|---|---|
| Failures in problem-solving | We lack sufficient knowledge of the system to handle unexpected situations. | “A common issues is the ward returning a [fingerstick glucose] meter for service because it 'is broken.' At least 90% of the time, the clinical laboratory determines that the meter is working perfectly fine.”[3] | We use 2 SD control limits and repeat the controls, repeat the controls, and finally get the values "in." But then we do nothing to understand why it took so many repeats to get the controls in. |

Trouble-shooting has become something of a lost art. Back in the "good old days," you could rely on a technologist who had some experience to “sniff out” the problems with a method. These days, labs are increasingly dependent on the technical support staff of the manufacturer.

Violations

Violations are actually a type of rule-based mistake, but because of their serious nature we consider them separately. These are cases where we intentionally avoid using a good rule in a situation.

| Type of Error | Definition | Laboratory Example | Analytical Example |
|---|---|---|---|
| Routine violations | We ignore rules to avoid “unnecessary” effort, to complete a job quickly, or to get around what seem to be overly laborious actions. | “A patient's result is unexpected [on a fingerstick glucose]. Instead of following the recommended procedure to verify the instrument is working properly (i.e. Rerun, quality control materials), a supervising nurse quickly performs a fingerstick glucose test on herself to show the other nurse that the instrument is performing correctly.”[3] | Releasing patient test results, when pressed by the doctor on the phone, even though the run is out-of-control (just by a little bit, of course). |
| Situational violations | Procedures and plans are so complex that we feel we can't complete the task if we stick to the plan. | “Many sites find [annual assessment of competency] to be an excessive burden, having to demonstrate the competency of each test person annually. The clinical laboratory has been asked to 'change the rules' to either reduce the frequency of competency, or ideally eliminate the requirement of regular compentency assessment altogether.”[3] | Not recalling the previous week's or month's patient test results when weekly or monthly EQC results are out-of-control. Not repeating the EQC validation protocol when the weekly or monthly EQC results are out-of-control. |

Routine violations often occur in cases where the principle of least effort points to a different set of actions than the documented plan. Finally, there is a category of violations known as thrill-seeking or optimizing violations. These violations are caused by thrill-seekers (breaking rules to appear tough, to avoid boredom, for excitement) as well as outright saboteurs. That is probably the least likely source of error, but one that nevertheless exists. And frankly, I'm hard pressed to find violations by thrill-seeking laboratory professionals. Perhaps the term “thrill-seeking laboratory professional” is simply an oxymoron.

Believe it or not, there are even deeper classifications for errors (like correct improvisations, misventions, and mispliances). Our purpose here is not to illuminate every flavor of error, however – we merely wish to delineate the major categories and confirm that they occur in the laboratory.

The important thing to realize here is the infinite variety of error. There are many ways to fail. And let us not forget, these failures accumulate, mingle, and multiply. Often an accident is not caused by a single failure, but by a combination of a violation and an error.

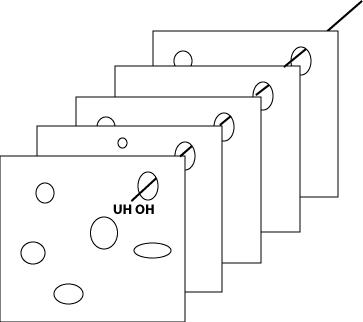

The Defenses against Error: Swiss Cheese

How do we defend ourselves against this seemingly limitless array of errors? Well, we marshal an equally vast number of defenses. We build physical defenses like better instrumentation, automation, built-in checks, safety devices, ergonomic construction to prevent certain actions, locks, alarms, protective equipment. We also rely on training, regulation, rules and procedures, professional education requirements, supervisors and oversight.

The idea is to combine all our physical and technical safeguards with our people and procedures to provide “defenses in depth.” Ideally, these would provide a series of solid walls that prevent any dangers from breaching the system and becoming hazards. Unfortunately, as it turns out, these solid walls are much more like Swiss cheese.

It's always rewarding for those of us from Wisconsin to use a cheese metaphor in a scholarly discussion. Having long been dismissed as “cheeseheads”, we are gratified to demonstrate that cheese, after all, can teach us a valuable lesson about the world. The “Swiss Cheese” metaphor is a classic of the safety literature. It recognizes the fact that every defense has a number of holes in it (dynamically enlarging and shrinking), and that when these holes line up, an opportunity exists for a danger to penetrate the entire system and become a hazard.

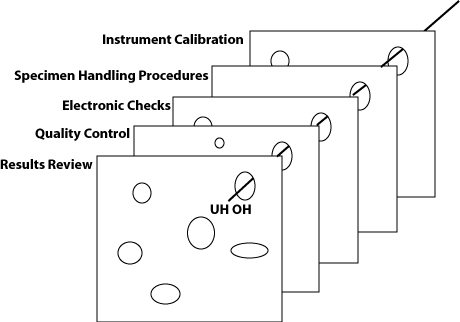

In the ideal lab, the instrumentation contains a huge number of engineering safeguards as well as electronic and software checks. The laboratory staff has professional education (MT, MLT, etc.), continuing education and training, competency assessment, as well as a robust set of procedures and rules recorded in the lab manual. Quality control, calibration, proficiency testing, peer group analysis are more defenses against hazards. Supervisors, managers, regulators, and inspectors help test, reinforce and augment these defenses.

Using this metaphor, we can see that each component makes a contribution, but none is sufficient by itself. An even more realistic picture would note that some layers have more holes than others (i.e. some safeguards are more like Lacy Swiss), and that some layers only appear at certain times (for instance, regulatory inspections may only serve as a defense immediately around the time of the inspections, and weeks and months afterward, the inspection results are less reflective of the real state of the lab).
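The metaphor can be put into rough numbers. The sketch below assumes, for simplicity, that the holes in different layers line up independently, so the chance of an error passing every layer is the product of the per-layer chances. The layer names and probabilities are hypothetical, chosen only for illustration. Real holes are often correlated (the same understaffed shift can widen several layers at once), so treat the result as optimistic.

```python
from collections.abc import Iterable
from math import prod

# Hypothetical per-layer "hole" probabilities: the chance that a given
# error slips through that defense. Illustrative numbers only.
LAYERS = {
    "instrument checks": 0.10,
    "trained staff": 0.20,
    "quality control": 0.05,
    "supervisory review": 0.30,
}


def p_penetrate(hole_probs: Iterable[float]) -> float:
    """Probability that an error passes every layer, ASSUMING the holes
    in different layers line up independently of one another."""
    return prod(hole_probs)


def without(layers: dict[str, float], name: str) -> list[float]:
    """Hole probabilities of all layers except the named one."""
    return [p for layer, p in layers.items() if layer != name]


if __name__ == "__main__":
    print(f"all layers: {p_penetrate(LAYERS.values()):.5f}")
    for name in LAYERS:
        print(f"without {name}: {p_penetrate(without(LAYERS, name)):.5f}")
```

With these made-up values, even the best single layer lets 1 error in 20 through, while the full stack lets through about 3 in 10,000; removing the QC slice alone raises that twentyfold.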

QC for the Future? Declaring Victory, Risking Retreat?

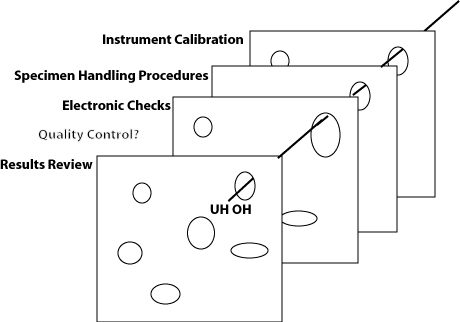

What will QC look like in the Future? Will there be more or fewer slices of Swiss cheese? Thicker or thinner? With smaller or bigger holes?

The true absurdity of the EQC debate is revealed when considered from the “Swiss cheese” perspective. Under the EQC thinking, you only need the extra piece of “QC cheese” once a week or once a month (those hazards that sneak through on other days don't matter). And the EQC thinking asserts that an electronic simulation of one of the layers of the defense is just as good as the real thing. Risk management principles aren't going to change those facts, but they may provide a rationalization for many laboratories to perform less QC.
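To illustrate what an intermittent slice of "QC cheese" means, the sketch below models QC as a layer that exists only on the days it is run. It assumes an error is caught by QC only if QC is run on the same day the error occurs; it ignores later detection at the next scheduled QC event, which would come after that day's patient results were released. The constants and function names are hypothetical, not figures from any EQC option.

```python
# Hypothetical hole probabilities (illustration only):
P_OTHER_LAYERS = 0.006  # chance an error slips past all non-QC defenses
P_QC_HOLE = 0.05        # chance it also slips past QC, on a day QC is run


def daily_penetration(qc_run_today: bool) -> float:
    """Probability that an error occurring today passes every defense.

    On days without QC the QC slice is simply absent, which is the same
    as a hole probability of 1.0 for that layer.
    """
    qc_hole = P_QC_HOLE if qc_run_today else 1.0
    return P_OTHER_LAYERS * qc_hole


def average_penetration(qc_every_n_days: int, days: int = 28) -> float:
    """Average daily penetration over `days` when QC runs on days 0, n, 2n, ...

    qc_every_n_days = 1 models daily QC, 7 weekly EQC, 28 roughly monthly EQC.
    """
    total = sum(
        daily_penetration(day % qc_every_n_days == 0) for day in range(days)
    )
    return total / days


if __name__ == "__main__":
    for label, n in (("daily", 1), ("weekly", 7), ("monthly", 28)):
        print(f"{label:>7}: {average_penetration(n):.5f}")
```

Under these assumptions, weekly QC leaves the average daily penetration probability about 17 times higher than daily QC, and monthly QC is worse still.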

The latest proposals for Risk Management-based QC are not yet concrete. We can speculate, but it is unclear what Risk Managed QC will entail. What does seem certain is that the manufacturer will go through a Risk assessment and mitigation cycle, and “if hazards cannot be avoided or prevented, the user must be provided with the information to understand and address residual risks.”[6]

The current descriptions of risk management detail an assessment process which includes preliminary hazard analyses where “[w]hen failure data is not available, perhaps when a new assay, technology or application is being evaluated, expert judgment is relied upon to rank the likelihood on a qualitative scale, using terms such as frequent, occasional, rare, and theoretical. Since the purpose of a preliminary hazard analysis is to identify the risks early so they can be systematically eliminated during the design phase of a product or process, or at least reduced to an acceptable level, knowing the relative risk is good enough.” [6] Already, these relative risk estimates are being described optimistically: “It is reasonable to assume that improbable results would be challenged and implausible results disregarded by physicians.”[6]

In a previous essay, we noted the “Risks of Risk Management.” Relevant to this lesson is the risk that manufacturer experts find it in their self-interest to underestimate the risks of their instruments - by assuming the severity of any erroneous test result is small, by assuming that any erroneous test result will be detected by the physician (it has been noted that this is not true[7]), or by assuming that erroneous test results occur only very rarely.
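To see how much an optimistic estimate can matter, here is a minimal sketch of a qualitative risk ranking. Only the likelihood terms (frequent, occasional, rare, theoretical) come from the quoted text; the numeric ranks, the severity scale, the likelihood × severity scoring, and the acceptance cut-off are hypothetical choices, typical of simple risk matrices but not taken from any particular standard or manufacturer.

```python
# Ordinal likelihood terms quoted in the risk-management text; the numeric
# ranks, severity levels, and acceptance cut-off are HYPOTHETICAL.
LIKELIHOOD = {"theoretical": 1, "rare": 2, "occasional": 3, "frequent": 4}
SEVERITY = {"negligible": 1, "minor": 2, "serious": 3, "critical": 4}
ACCEPTABLE_MAX = 6  # hypothetical cut-off for "acceptable residual risk"


def risk_score(likelihood: str, severity: str) -> int:
    """Simple likelihood x severity ranking, as in many risk matrices."""
    return LIKELIHOOD[likelihood] * SEVERITY[severity]


def is_acceptable(likelihood: str, severity: str) -> bool:
    """True if the ranked risk falls at or below the hypothetical cut-off."""
    return risk_score(likelihood, severity) <= ACCEPTABLE_MAX


if __name__ == "__main__":
    # The same hazard, estimated cautiously and then optimistically.
    print(is_acceptable("occasional", "serious"))  # score 9 -> False
    print(is_acceptable("rare", "minor"))          # score 4 -> True
```

The same hazard moves from unacceptable to acceptable simply by rating it "rare" and "minor" instead of "occasional" and "serious," which is exactly the kind of assumption a manufacturer has an incentive to make.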

Ultimately, these residual risks will be passed on to the laboratory, and it becomes the laboratory manager's responsibility to accept the risk, mitigate the risk, or reject the method entirely. “The take home message is that it is more important for the laboratory director to ensure that all of the Quality Systems are working well and producing accurate test results than it is to debate over which or any of the EQC options to select.”[8]

Here, unfortunately, is a Devil's gift to the laboratory manager. The laboratory manager has the freedom of the marketplace, and the legal responsibility of the regulations, to choose accurate systems for the hospital. But in a marketplace where many or most instruments may not satisfy these goals, where manufacturers frequently do not provide the necessary data to evaluate their methods, and in an environment where the use of POC devices is often demanded by physicians regardless of quality performance, the laboratory manager has few choices and little opportunity. He or she may feel forced to select an imperfect instrument.

Call this the "Laboratory-Director-ization" of the War on Error. The regulators haven't been successful, so they're passing the buck. The manufacturers seem unwilling to embrace this task of providing better QC systems, so they're passing the buck. The laboratory director, already burdened by cost-cutting pressures from management above, and staffing and competency deficiencies from below, is now responsible for all of the instrument quality problems, too. The regulators and manufacturers can retreat back to their institutions, confident that it's no longer their problem.

The Optimistic, Realistic Goal: Chronic Unease

The price of quality, like liberty, is eternal vigilance. While comforting, the idea that we can conquer errors with feats of engineering, or a new paradigm of thinking, or a new way of regulation, is an illusion. New technology and techniques can reduce some errors, but they cannot completely eliminate them. As long as humans perform the processes, design the instrumentation to perform those processes, design the automation of those processes, or monitor those processes, errors will occur. If we forget to be afraid of this reality, we open the system up to greater failure.

The ideal state of an organization committed to patient safety is not peaceful utopia, but a state of persistent worry. This has been called “chronic unease” and it reflects the organization's belief that despite all efforts, errors will occur in the future, some of them in unforeseen, unimaginable ways, and that even minor problems can quickly escalate into system-threatening failures. In addition to all the formal defenses against these threats, the key asset is a group of thinking professionals. Even if to err is human, to catch that error is also ultimately a task requiring humans again:

“The people in these organizations know almost everything technical about what they are doing - and fear being lulled into supposing that they have prepared for any contingency. Yet even a minute failure of intelligence, a bit of uncertainty, can trigger disaster. They are driven to use a proactive, preventative decision making strategy. Analysis and search come before as well as after errors. They try to be synoptic while knowing that they can never fully achieve it. In the attempt to avoid the pitfalls in this struggle, decision making patterns appear to support apparently contradictory production-enhancing and error-reduction strategies.”[9]

If we are fighting a War on Error, we need to assure that the frontline soldiers in this fight – the medical technologists, department supervisors, laboratory managers – are equipped with the professional training they need. They need the home front support of a committed management team. They need the right tools to do the job from dedicated manufacturers. And they need useful, actionable intelligence from the academic and scientific community. We're all a part of this struggle.

Let's fight for that world.

References

1. D. Joe Boone, PhD, “A History of the QC Gap in the Clinical Laboratory,” LabMedicine, October 2005, Vol 36, No. 10, p. 613.

2. The oft-referenced 1999 IOM report, “To Err is Human,” stands out among those reports.

3. Valerie L. Ng, PhD, “QC for the Future: Laboratory Issues - POCT and POL Concerns,” LabMedicine, October 2005, Vol 36, No. 10, pp. 621-625.

4. James Reason and Alan Hobbs, Managing Maintenance Error, Ashgate Publishing, Burlington VT, 2003, p. 39.

5. Walter F. Roche, Jr., “Lab Mistakes Threaten Credibility, Spur Lawsuits,” Los Angeles Times, December 2, 2005.

6. Donald M. Powers, PhD, “Laboratory Quality Requirements Should be Based on Risk Management Principles,” LabMedicine, October 2005, Vol 36, No. 10, pp. 633-638.

7. HMJ Goldschmidt, RS Lent, “From data to information: How to define the context?” Chemometrics and Intell Lab Sys. 1995;28:181-192.

8. Judy Yost, “CLIA and Equivalent Quality Control: Options for the Future,” LabMedicine, October 2005, Vol 36, No. 10, p. 616.

9. T.R. LaPorte and P.M. Consolini, “Working in practice but not in theory: theoretical challenges of 'high-reliability' organizations,” Journal of Public Administration Research and Theory, 1, 1991, p. 27, as quoted in James Reason, Managing the Risks of Organizational Accidents, Ashgate, Burlington VT, 1997.