Quality Requirements and Standards

HbA1c in 2010, Part X

The next-to-last installment of an extended discussion of HbA1c methods and the analytical quality necessary for patient care. While we may have found problems with many HbA1c methods at the point of care, are methods any better in the central laboratory?

James O. Westgard, PhD

March 2010

This exercise is the conclusion of the RWL 2010 example of method validation studies for HbA1c.

- Episode 1 identified an abstract from the 2009 AACC national meeting [1], a validation study published in the January 2010 issue of Clinical Chemistry [2], and an accompanying editorial in that same issue [3].

- Episode 2 discussed the information available in the abstract and concluded that any decision on acceptable performance depended on knowing the quality needed for the clinical use of a HbA1c test.

- Episode 3 reviewed the recommendations for quality requirements, as discussed by Bruns and Boyd in their editorial [3] that accompanied the publication of the evaluation study by Lenters-Westra and Slingerland [2].

- Episode 4 discussed the study by Lenters-Westra and Slingerland.

- In Episode 5, Dr. Craig Foreback provided a discussion of measurement principles to help us understand both the NGSP certification protocol and the use of 3 different secondary reference methods in the Lenters-Westra and Slingerland study.

- Episode 6 provided a more detailed discussion of the statistical web analysis of the replication and comparison of methods experimental results.

- Episode 7 made use of error grids to compare different quality goals and requirements that might be applied to determine the acceptability of a HbA1c method.

- Episode 8 discussed how to prepare a Method Decision Chart to help judge the acceptability of performance.

- Episode 9 described a "mixed units" Method Decision Chart that could be used to summarize all the performance results for these POC devices, and showed that only the devices with the best precision and lowest bias provided the quality necessary for reliable patient testing.

In this, the final episode, we take a quick look at the performance of other laboratory testing methods, as available from the latest CAP PT survey.

Real World Performance

Perhaps the best measure of the quality being achieved in the real world can be found in the CAP proficiency testing data. Great care has been taken to prepare whole blood samples that resemble real patient specimens, and the concentrations of the pools have been carefully targeted to test the critical analytical range. For example, the most recent CAP survey results posted on the NGSP website (as I write this on 2/24/2010) are “updated 12/09” and represent the 2009 GH2-B fresh pooled samples, with NGSP certified values of 6.6 %Hb, 7.4 %Hb, and 9.5 %Hb.

You can download these results from www.ngsp.org. Click on “Survey Grading” on the homepage, then select from the menu on the left side “CAP GH2 Data UPDATED 12/09”, which will take you to the page where you can select the latest survey results, in this case “CAP GH-2b 2009 Summary.” You should have a copy of that in your hands as you proceed in reading this discussion.

Review of CAP 2009 GH2-B survey results

Sample GH2-04, with an NGSP reference value of 6.6 %Hb, is particularly relevant to our discussions since we have focused on the ADA diagnostic criterion of 6.5 %Hb. Sample GH2-06 would also be very useful, since its concentration of 7.4 %Hb makes it applicable for assessing performance when monitoring the patient treatment goal of 7.0 %Hb. However, we’re going to focus on the 6.6 %Hb sample here.

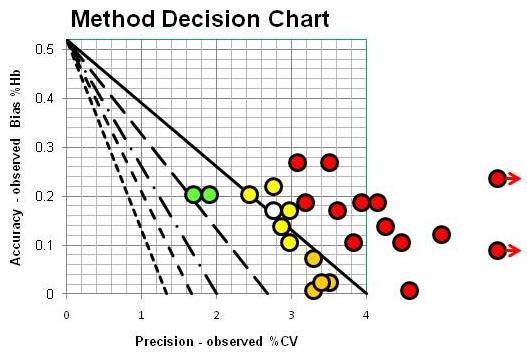

The survey includes 26 different method subgroups and identifies the number of laboratories in each subgroup, which ranges from as few as 19 to as many as 349, out of a total of some 2600 laboratories participating in this survey. For each survey sample, the results are summarized by presenting the mean, bias, and %CV for each method subgroup. By plotting operating points with the absolute bias (in %Hb) as the y-coordinate and the %CV as the x-coordinate, we can use the Method Decision Chart with mixed units, just as we did for the POC devices in Episode 9. The CAP survey results for all these method subgroups can then be displayed graphically, as shown below:

NOT a pretty picture, even if it is colorful! The green operating points represent 2 method subgroups that achieve CVs of 2.0% or better, and the yellow ones CVs of 3.0% or better. The orange and red operating points are method subgroups having CVs above 3.0%, which include 17 of the 25 subgroups [one method subgroup was omitted because of known interference by EDTA]. The 4 method subgroups shown by gold operating points have borderline precision, with CVs between 3% and 4%, and small biases of less than 0.1 %Hb.
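The color coding amounts to a simple rule applied to each operating point. The sketch below applies that rule, using the CV cut-offs of 2.0% and 3.0% stated above and treating "between 3% and 4%" as 3.0% < CV ≤ 4.0% (the exact boundary handling is our assumption). The operating points are made-up values for illustration, not the CAP GH2-04 results, and because the text does not say how orange is separated from red, those two colors are lumped into one band.

```python
from collections import Counter


def cv_band(cv_percent: float) -> str:
    """Precision band from the discussion: CV <= 2.0%, CV <= 3.0%, or CV above 3.0%."""
    if cv_percent <= 2.0:
        return "CV <= 2.0%"
    if cv_percent <= 3.0:
        return "CV <= 3.0%"
    return "CV > 3.0%"


def is_borderline(cv_percent: float, abs_bias_hb: float) -> bool:
    """Borderline precision (assumed 3.0% < CV <= 4.0%) combined with |bias| < 0.1 %Hb."""
    return 3.0 < cv_percent <= 4.0 and abs_bias_hb < 0.1


# Hypothetical (CV in %, |bias| in %Hb) operating points, NOT the CAP survey values.
points = [(1.8, 0.05), (2.6, 0.12), (3.4, 0.04), (4.5, 0.20)]

counts = Counter(cv_band(cv) for cv, _ in points)
borderline = sum(is_borderline(cv, bias) for cv, bias in points)
print(dict(counts), "borderline:", borderline)
```

With real data you would replace `points` with the subgroup CVs and absolute biases read from the survey summary.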

Performance doesn’t look much better than observed for the POC devices, does it? Yes, the biases are smaller, and more method subgroups cluster between 2- and 3-sigma performance, but that’s still not very good! I know you’re going to argue this is an unfair comparison because each of these operating points represents many different laboratories, not just the performance in one laboratory. True, but the “white” point represents the DCA Vantage in a group of 97 different laboratories. Many of our highly automated, high-production methods are not as consistent from lab to lab as the DCA Vantage POC device!
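The "2- and 3-sigma" language refers to the sigma metric, which combines a quality requirement with the observed bias and CV: sigma = (TEa% - |bias|%) / CV%. A minimal sketch for the mixed-units case follows, converting the absolute bias in %Hb into a percentage of the NGSP target of 6.6 %Hb. The allowable total error of 6% is a placeholder assumption, not a value taken from this discussion, and the function names and operating points are hypothetical; substitute whichever quality requirement you judge appropriate.

```python
# Hypothetical quality requirement: allowable total error (TEa) as % of the target.
# This is a placeholder assumption; substitute your own requirement.
TEA_PERCENT = 6.0


def sigma_metric(cv_percent: float, abs_bias_hb: float, target_hb: float,
                 tea_percent: float = TEA_PERCENT) -> float:
    """Sigma = (TEa% - |bias|%) / CV%, converting |bias| from %Hb to % of the target."""
    bias_percent = 100.0 * abs_bias_hb / target_hb
    return (tea_percent - bias_percent) / cv_percent


def in_two_to_three_sigma(sigma: float) -> bool:
    """True when performance falls in the 2- to 3-sigma range."""
    return 2.0 <= sigma < 3.0


target = 6.6  # NGSP value for sample GH2-04, %Hb

# Hypothetical (CV in %, |bias| in %Hb) operating points, NOT the CAP survey values.
for cv, bias in [(2.5, 0.066), (1.8, 0.05), (4.5, 0.20)]:
    s = sigma_metric(cv, bias, target)
    print(f"CV {cv:.1f}%, |bias| {bias:.3f} %Hb -> sigma {s:.2f}")
```

Working in percentages keeps all three quantities on the same scale; an equivalent approach would convert TEa and CV into %Hb at the 6.6 %Hb level instead.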

What’s the point?

Comparability of test results is important for patient care today because patients don’t stay within one healthcare organization or even one healthcare network. Lab-to-lab differences in test results are still significant, even with all the effort being expended to certify that these methods give comparable results. The problem with performance is not limited to POC devices! Our high-production methods do not give results that are comparable from lab to lab, organization to organization, network to network, state-wide, or nation-wide.
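To illustrate why lab-to-lab variation matters at a decision level, here is a rough sketch. It assumes that each laboratory's result for a patient whose true HbA1c is 6.6 %Hb is normally distributed around that value with SD = CV × mean, and that two laboratories measure independently. Under those assumptions it estimates how often the two laboratories would land on opposite sides of the ADA diagnostic criterion of 6.5 %Hb. The function names and CV values are hypothetical illustrations, not figures from the CAP survey.

```python
import math


def prob_at_or_above(cutoff: float, true_value: float, cv_percent: float,
                     bias: float = 0.0) -> float:
    """P(result >= cutoff) for a normal result with mean true_value + bias and SD = CV * mean."""
    mean = true_value + bias
    sd = cv_percent / 100.0 * mean
    z = (cutoff - mean) / sd
    # Upper-tail probability of the standard normal: 1 - Phi(z) = erfc(z / sqrt(2)) / 2
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def prob_discordant(cutoff: float, true_value: float, cv_percent: float,
                    bias: float = 0.0) -> float:
    """Probability that two independent labs classify the same patient differently at the cutoff."""
    p = prob_at_or_above(cutoff, true_value, cv_percent, bias)
    return 2.0 * p * (1.0 - p)


# Hypothetical among-laboratory CVs spanning the ranges discussed above.
for cv in (2.0, 3.0, 4.0):
    d = prob_discordant(cutoff=6.5, true_value=6.6, cv_percent=cv)
    print(f"CV {cv:.1f}%: P(two labs disagree at 6.5 %Hb) = {d:.2f}")
```

A patient only 0.1 %Hb above the cutoff is a hard case at any realistic CV; the sketch is meant only to show that the chance of two laboratories disagreeing grows as the among-laboratory CV grows.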

The current danger is that the ADA guidelines to physicians for diagnosis and treatment of diabetics assume higher quality laboratory testing than is available in the real world. That’s the message from the paper by Lenters-Westra and Slingerland [2]. That’s the message from the editorial from Bruns and Boyd [3]. Hopefully, that message is even clearer when you understand the statistical results, the various requirements for quality, and how they come together in the Method Decision Chart to provide an alarming picture of quality in the real world!

References

- Cox J, Cowden E, Krauth G, Li J, Ledden D. A Comparison of the DCA Vantage Analyzer and Afinion AS100 Analyser Using HbA1c Reagent. (Abstract) Clin Chem 2009;55(No. 6 Supplement):A92.

- Lenters-Westra E, Slingerland RJ. Six of Eight Hemoglobin A1c Point-of-Care Instruments Do Not Meet the General Accepted Analytical Performance Criteria. Clin Chem 2010;56:44-52.

- Bruns DE, Boyd JC. Few Point-of-Care Hemoglobin A1c Assay Methods Meet Clinical Needs. Clin Chem 2010;56:4-6.

- Westgard JO. Basic Method Validation, 3rd ed. Madison WI:Westgard QC, 2008.

- Boyd JC, Bruns DE. Quality specifications for glucose meters: Assessment by simulation modeling of errors in insulin dose. Clin Chem 2001;47:209-214.

- Holmes EW, Ersahin C, Augustine CJ, Charnogursky GA, Grysbac M, Murrel JV, McKenna KM, Nabhan F, Kahn SE. Analytical bias among certified methods for the measurement of Hemoglobin A1c. Am J Clin Pathol 2008;129:540-547.

- Bland JM, Altman DG. Statistical methods for assessing agreement between two methods of clinical measurement. Lancet 1986;i:307-310.

- CLSI EP27-P. How to Construct and Interpret an Error Grid for Diagnostic Assays. Clinical and Laboratory Standards Institute. Wayne, PA. 2009.

- Clarke WL, Cox D, Gonder-Frederick LA, et al. Evaluating clinical accuracy of systems for self-monitoring of blood glucose. Diabetes Care 1987;10:622-628.

- Parkes JL, Slatin SL, Pardo S, Ginsberg BH. A new consensus error grid to evaluate the clinical significance of inaccuracies in the measurement of blood glucose. Diabetes Care 2000;23:1143-1148.

- Westgard JO. Six Sigma Quality Design & Control: Desirable precision and requisite QC for laboratory measurement processes. 2nd ed. Madison WI:Westgard QC, 2006.