Quality Standards

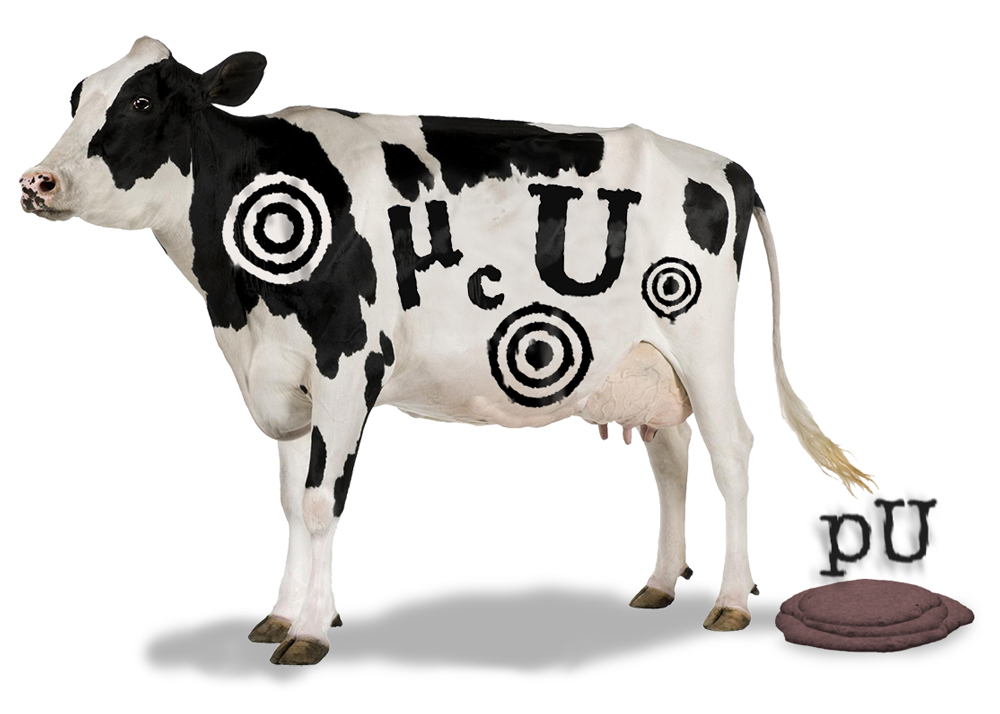

Five of Eight Assays on an Atellica CH 930 cannot meet pU goals

A continuing investigation into assay capability to meet new performance specifications for permissible measurement uncertainty (pU). Can the Atellica CH 930 hit these targets?

5 of 8 assays from an Atellica CH 930 analyzer cannot meet 2021 pU% goals

Sten Westgard, MS

March 2022

- 6 of 8 assays of a Hitachi 7600 cannot meet pU goals.

- 8 of 9 assays of a Cobas c702 cannot meet pU goals.

- 6 of 8 assays of 2 ARCHITECT c16000s cannot meet pU goals.

- 6 of 7 assays of an AU 680 cannot meet pU goals

- MU pU goals, can anything hit them?

The 2021 CCLM article by Braga and Panteghini https://pubmed.ncbi.nlm.nih.gov/33725754/ remains a seminal publication, providing a defined set of performance specifications for measurement uncertainty. In an earlier paper, Braga and Panteghini noted that the entire pU cannot be consumed by the laboratory alone; a sizeable portion must be allotted to the calibrator: "If the uncertainty of the value assigned to the commercial calibrator is within 50% of [pU], the individual laboratory has, in most cases, a sufficient part of the uncertainty budget to spend for the random sources." In other words, the laboratory only gets half of the pU budget; the diagnostic manufacturer gets the other half.
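As a rough illustration of that split, the sketch below halves a few pU values to get the laboratory's share. The function name `lab_share_of_pu` and the `lab_fraction` parameter are hypothetical, and the flat 50% split is the simplification used in this article, not a formula taken from Braga and Panteghini.

```python
def lab_share_of_pu(pu_percent: float, lab_fraction: float = 0.5) -> float:
    """Return the part of the pU budget left to the laboratory, in percent.

    Assumption: a flat split of the budget, with lab_fraction = 0.5 as
    described in this article (half for the lab, half for the calibrator).
    """
    if not 0.0 <= lab_fraction <= 1.0:
        raise ValueError("lab_fraction must be between 0 and 1")
    return pu_percent * lab_fraction


# pU values (APS for standard MU, %) as listed in this article
for name, pu in [("sodium", 0.27), ("potassium", 1.96), ("total bilirubin", 10.5)]:
    share = lab_share_of_pu(pu)
    print(f"{name}: pU {pu}% -> laboratory share {share:.3f}%")
```

For sodium this gives 0.135%, which the tables in this article show rounded to 0.14%.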

A 2021 article about the analytical performance of an Atellica CH 930 analyzer provides another convenient opportunity to test whether today's instruments can meet the new performance specifications:

Atellica CH 930 chemistry analyzer versus Cobas 6000 c501 and Architect ci4100 - a multi-analyte method comparison, Imola Gyorfi, Krisztina Pal, Ion Bogdan Manescu, Oana R. Porea, Minodora Dobreanu. Revista Romana de Medicina de Laborator, Vol. 29 Nr. 4, October 2021.

While the study evaluated 22 analytes, remember that Braga and Panteghini only published 13 performance specifications. Between those two lists, there were only 8 analytes in common. So we will evaluate whether those 8 assays can meet the MU APS.

The Braga and Panteghini pU and the performance

Imprecision was estimated from just over a month of daily QC data, which is less than ideal for MU calculations. Bias was estimated by a comparison study of the Atellica CH 930 and the Roche Cobas 6000 c501. Since these are major platforms from two major diagnostic manufacturers, one might have assumed that they perform much the same, but the study showed otherwise. For some analytes, three levels of QC were recorded; for others, only two. Bias was calculated at multiple decision levels; for our purposes here, we will show the maximum bias.

| Measurand | Milan Model | APS for standard MU, % | APS for desirable lab CV, % | Level 1 CV | Level 2 CV | Level 3 CV | Max absolute bias observed |
|---|---|---|---|---|---|---|---|
| total bilirubin | Biological Variation (2nd best) | 10.5% | 5.25% | 0.1% | 5.03% | 4.47% | 12.1% |
| creatinine | Biological Variation (2nd best) | 2.2% | 1.1% | 4.29% | 3.55% | -- | 0.7% |
| glucose | outcome-based (best) | 2.00% | 1.00% | 2.15% | 1.92% | 1.92% | 1.0% |
| sodium | Biological Variation (2nd best) | 0.27% | 0.14% | 0.71% | 0.57% | 0.57% | 1.7% |
| potassium | Biological Variation (2nd best) | 1.96% | 0.98% | 0.92% | 0.58% | 0.70% | 3.0% |
| total calcium | Biological Variation (2nd best) | 0.91% | 0.46% | 3.57% | 3.04% | 2.25% | 5.4% |
| urea | Biological Variation (2nd best) | 7.05% | 3.03% | 2.57% | 2.57% | -- | 11.4% |
| alanine aminotransferase | Biological Variation (2nd best) | 4.65% | 2.38% | 3.05% | 2.72% | -- | 12.4% |

Does pU Pass or Fail?

When the performance specification is applied to the imprecision measured on this instrument, what is the verdict? Note that MU and pU are specifications that mostly ignore bias. Measurement uncertainty can't be combined across all the levels if bias exists. So the approaches typically assume one of three things: (1) the bias is so small (by an unspecified amount) that it can be ignored, (2) the bias varies over the long term, so it can be incorporated as just another source of imprecision, or (3) the bias must be eliminated before any of the measurement uncertainty approaches can be applied. For our purposes here, we will pretend that either the bias is small (it isn't) or it can be corrected (who knows?).

| Measurand | Milan Model | APS for standard MU, % | APS for desirable lab CV, % | Level 1 CV | Passes? | Level 2 CV | Passes? | Level 3 CV | Passes? |
|---|---|---|---|---|---|---|---|---|---|
| total bilirubin | Biological Variation (2nd best) | 10.5% | 5.25% | 0.1% | PASSES | 5.03% | PASSES | 4.47% | PASSES |
| creatinine | Biological Variation (2nd best) | 2.2% | 1.1% | 4.29% | FAILS | 3.55% | FAILS | -- | -- |
| glucose | outcome-based (best) | 2.00% | 1.00% | 2.15% | FAILS | 1.92% | FAILS | 1.92% | FAILS |
| sodium | Biological Variation (2nd best) | 0.27% | 0.14% | 0.71% | FAILS | 0.57% | FAILS | 0.57% | FAILS |
| potassium | Biological Variation (2nd best) | 1.96% | 0.98% | 0.92% | PASSES | 0.58% | PASSES | 0.70% | PASSES |
| total calcium | Biological Variation (2nd best) | 0.91% | 0.46% | 3.57% | FAILS | 3.04% | FAILS | 2.25% | FAILS |
| urea | Biological Variation (2nd best) | 7.05% | 3.03% | 2.57% | PASSES | 2.57% | PASSES | -- | -- |
| alanine aminotransferase | Biological Variation (2nd best) | 4.65% | 2.38% | 3.05% | FAILS | 2.72% | FAILS | -- | -- |
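The pass/fail verdicts in the table above can be reproduced with a short sketch. The data are copied from the table; the names `ASSAYS` and `passes` are hypothetical, and two rules are assumptions made for illustration: a CV equal to the limit counts as a pass, and an assay fails overall if any recorded QC level fails.

```python
# (APS for desirable lab CV %, observed CV % at each recorded QC level),
# copied from the table above; missing Level 3 values are simply omitted.
ASSAYS = {
    "total bilirubin": (5.25, [0.1, 5.03, 4.47]),
    "creatinine": (1.1, [4.29, 3.55]),
    "glucose": (1.00, [2.15, 1.92, 1.92]),
    "sodium": (0.14, [0.71, 0.57, 0.57]),
    "potassium": (0.98, [0.92, 0.58, 0.70]),
    "total calcium": (0.46, [3.57, 3.04, 2.25]),
    "urea": (3.03, [2.57, 2.57]),
    "alanine aminotransferase": (2.38, [3.05, 2.72]),
}


def passes(cv: float, lab_aps: float) -> bool:
    """Assumed rule: an observed CV at or below the lab APS passes."""
    return cv <= lab_aps


# Per-level verdicts for each assay
verdicts = {
    name: [passes(cv, lab_aps) for cv in cvs]
    for name, (lab_aps, cvs) in ASSAYS.items()
}

# Assumed rule: an assay fails overall if any recorded level fails
failing = [name for name, levels in verdicts.items() if not all(levels)]

for name, levels in verdicts.items():
    print(name, ["PASSES" if ok else "FAILS" for ok in levels])
print(f"{len(failing)} of {len(ASSAYS)} assays fail: {failing}")
```

Under these assumptions the sketch flags five of the eight assays, with only total bilirubin, potassium, and urea passing at every level.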

While the results here are not completely encouraging, the CH 930 actually fares better than many of the other instruments that we have analyzed. At the same time, it's important to note that the imprecision of creatinine, glucose, sodium, and total calcium is so large at one or more QC levels that it eclipses the entire pU budget, not just the 50% that the laboratory is supposed to be allotted.
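The comparison against the whole budget can be checked the same way. In this sketch (variable names are hypothetical), the worst observed CV for each assay is compared with the full pU from the tables, not the laboratory's half.

```python
# (full pU %, i.e. APS for standard MU, and observed CV % per QC level),
# copied from the tables in this article
FULL_BUDGET = {
    "total bilirubin": (10.5, [0.1, 5.03, 4.47]),
    "creatinine": (2.2, [4.29, 3.55]),
    "glucose": (2.00, [2.15, 1.92, 1.92]),
    "sodium": (0.27, [0.71, 0.57, 0.57]),
    "potassium": (1.96, [0.92, 0.58, 0.70]),
    "total calcium": (0.91, [3.57, 3.04, 2.25]),
    "urea": (7.05, [2.57, 2.57]),
    "alanine aminotransferase": (4.65, [3.05, 2.72]),
}

# An assay is flagged when its worst (largest) CV exceeds the full pU
exceeds_full_pu = [
    name for name, (pu, cvs) in FULL_BUDGET.items() if max(cvs) > pu
]
print(exceeds_full_pu)
```

Glucose is the marginal case: only its Level 1 CV (2.15%) is above the 2.00% pU, while the other two levels (1.92%) sit just under it.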

This points out, again, that there are powerful disconnects between the new maximum permissible measurement uncertainty performance specifications and the current performance of today's diagnostic instrumentation. The pU goals are laudable, but they don't look practically achievable. If we're to try to achieve analytical improvement, we have to mix the carrot with the stick: highlight and approve of the best of what's being done today, while strongly encouraging even more improvement in the future.