Guest Essay

What are the Risks of Risk Management?

- Moving from Quality to Risk

- What are the approaches to Risk?

- How do we assess and mitigate Risk?

- What are the failure modes of FMEA?

- How do we know if we've done our Risk mitigation right?

- Are the risks of Risk Management acceptable?

- Is it worth mitigating the risks of 100-Year Storms?

- References

Sten Westgard, MS

November 2005

There's a new new thing in healthcare laboratory quality. It's Risk Management and it's coming to a regulation near you. In the wake of the EQC options 1 through 3 failure, a new option 4 is being prepared, and it's likely that it will include many elements of Risk Management, Assessment, and Mitigation.

The recent issue of Lab Medicine (October 2005) included a report from the CLSI “QC for the Future” conference in March of 2005. The main thrust of a plurality of the articles was to recommend the adoption of Risk Management principles to address the “Option 4” problem[1]. CLSI is going to follow up the 2005 “QC for the Future” conference with a 2006 conference on “Risk Management Tools for Improved Patient Safety.” It may be reasonable to conclude that the QC for the Future is going to be Risk Management.

Like most new new things in healthcare, Risk Management has a long history in other sectors, particularly finance and insurance. Risk management has been applied with success and is ubiquitous in those industries. Now the business executives of healthcare are bringing these principles and practices into laboratory medicine. Indeed, the ISO standard, 14971: Medical Devices – Application of Risk Management to Medical Devices, is cited by many as a template which can be applied to the laboratory.

Moving from Quality to Risk

Put simply, Risk is the possibility of suffering harm. This, by itself, does not make risk bad. Everything we do involves some level of risk. Where we live, what we eat, how much we drive, what activities we do, all involve risk.

Risk is a part of every opportunity. Some opportunities are riskier than others, but if rewards are great enough, some judge the risk worthwhile. Entrepreneurs embrace risk, and the history of civilization is one of embracing and overcoming risk. By measuring, estimating, and planning for risk, we are able to pursue ever more opportunities.

Risk is omnipresent in healthcare. Every medical intervention carries with it the possibility that the patient will be harmed instead of healed. Side effects of drugs, complications of surgery, and of course medical mistakes, are the risks of medical care.

Risk Management is the art of figuring out the possible outcomes of your actions, and trying to plan for those outcomes, good and bad. If Quality asks "How do we do the right things right?", Risk Management asks a follow-up, "What can go wrong, and what can we do about it?"

What are the approaches to Risk?

Since we cannot live and cannot have healthcare without risk, the question becomes, How do we approach risk? As it turns out, there are a number of distinct attitudes toward risk:

Feasible Risk: Keep risks as low as reasonably practical. You can't make any process safer than is technologically and/or commercially possible.

Comparative Risk: Keep risks as low as other comparable risks. For instance, if the public accepts the risks of one activity, achieving that level of risk in another process is also acceptable. A risk question could be formulated like this: should healthcare be as safe as smoking, as safe as driving, or as safe as flying?

De minimis Risk: Reduce risk until it is trivial. In safety culture, this goal is set as a probability of 10⁻⁶ or better.

Zero Risk: No risk is acceptable. Safety doesn't exist while there is still a chance of an accident with harmful consequences. Zero risk doesn't actually exist, of course, but nevertheless it can be adopted as a goal, and it is a particularly apt goal for certain tasks. For instance, a zero risk approach for the handling of nuclear weapons and reactors is probably a necessary one.[2]

To this we can add the Hippocratic Oath, which contains the very core of a doctor's approach to risk: “First, Do No Harm.” Where the oath fits in the spectrum above may depend on your perspective. A patient may believe that the doctor acts closer to De minimis Risk, while a manufacturer may believe that Feasible Risk is the realistic approach.

It's also useful to note that no matter where the starting point is, the trend is to improve processes and practices to approach zero risk. With cars, for example, high accident and fatality rates were acceptable to the public decades ago, but gradually those dangers became unacceptable and the public began demanding better laws and safer cars. In the laboratory, when testing was first performed manually with pipettes, errors were much more frequent and were tolerated. As instrumentation and automation have entered the lab, the tolerable rate of errors has continually shrunk.

How do we assess and mitigate risk?

Estimating risk has a long history, and a set of tools was invented specifically for this purpose. The most common tool is known as Failure Modes and Effects Analysis, or FMEA. A universe of literature exists on FMEA and related tools like Fault-Tree Analysis and Failure Mode, Effects, and Criticality Analysis (FMECA). Often FMEA or FMECA is combined with Fault-Tree Analysis to provide both a listing and a visual display of the hazards and harms. For our purposes, we're going to do a crash course in FMEA (please bear in mind, this will be a bit like explaining the Decline and Fall of the Roman Empire in four paragraphs).

In addition to describing a generic FMEA process, we will use an example specific to healthcare: HFMEA™, the Health Care Failure Mode and Effect Analysis [3]. This is an actual Risk Management tool that was put into use in the VA system in 2001.

- Create a map of the process, and use that to identify all hazards and harms that can occur at each step (a hazard is a potential source of harm, and hazards can result in multiple harms). We strive for a complete catalogue of all the possible hazards and harms. Typically this involves brainstorming by a team made up of the staff that actually performs the process.

- Next, we assign a frequency or probability to each harm – estimating the likelihood that any given outcome will occur. In some cases, this number is a ranking from 1 to 10, with 1 being very rare, and 10 being frequent.

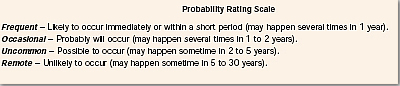

In the case of HFMEA™ [3], this ranking isn't even a number, but is a general frequency (remote, uncommon, occasional, frequent):

This can also be referred to as Probability of Occurrence (OCC) [4]:

| Score | OCC |
| --- | --- |
| 10 | Frequent (≥ 10⁻³) |
| 8 | Probable (< 10⁻³ and ≥ 10⁻⁴) |
| 6 | Occasional (< 10⁻⁴ and ≥ 10⁻⁵) |
| 4 | Remote (< 10⁻⁵ and ≥ 10⁻⁶) |
| 2 | Improbable/theoretical (< 10⁻⁶) |

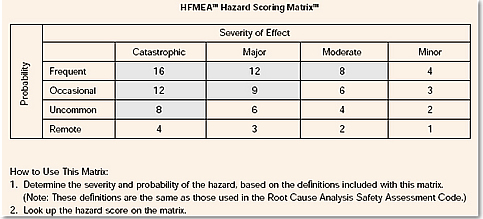

In still other cases, where actual performance is known, a probability figure can be used.

- We assign a severity rating to the damage should each harm actually occur. Again, this rating usually is a ranking from 1 to 10, with 1 being trivial and 10 being catastrophic. In the case of HFMEA™ [3], there is a choice of just four outcomes (catastrophic, major, moderate, and minor):

Here is a different severity rating [4]:

| Score | Severity of Harm (SEV) |
| --- | --- |
| 10 | Catastrophic - patient death |
| 8 | Critical - permanent impairment or life-threatening injury |
| 6 | Serious - injury or impairment requiring medical intervention |
| 4 | Minor - temporary injury or impairment not requiring medical attention |
| 2 | Negligible - inconvenience or temporary discomfort |

ISO 14971, which may be used as the source for a future clinical laboratory standard, does not specify which kind of ranking to use for Risk assessment. It accepts that there may be non-quantitative judgments, rankings of 1 to 5, or 1 to 10, as well as actual observed probabilities.

- Combining the severity rating with the frequency rating, we create an expression of the risk associated with the hazard: Hazard Score (or Criticality) = severity × frequency.

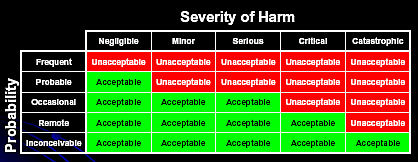

- Depending on the resulting “score”, we make choices on what we do. Is the risk acceptable? Do we need to reduce the risk? If we need to reduce the risk, what actions – or risk mitigations – will we take?

With HFMEA™ [3], there is a table that gives a Hazard Score Matrix:

Another Risk Acceptability chart looks like this [4]:

At the end of this process, we evaluate our Hazard Scores and make decisions on risk mitigation. Because of the numerous failure modes and the limited resources, it is generally suggested that we concentrate on the highest risks (i.e., the highest Hazard Scores) in a Pareto-style comparison. That is, concentrate on mitigating the risks of the most frequently occurring errors, and the most catastrophic consequences. For HFMEA™, that score is 8 or higher. The unstated implication is that we will accept, dismiss, ignore, or not have the resources to mitigate those risks with the lowest Hazard Scores.

- For mitigated risks, we calculate a new Criticality value. We also try to determine if any new hazards or harms have been created while we were mitigating the earlier hazard. We repeat this step until all hazards have been mitigated so that the risk scores are in the acceptable range.
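The scoring and prioritizing steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the category scores are the 10-point values quoted from [4], while the hazard names, their ratings, and the cutoff of 30 are invented for the example and do not come from any standard.

```python
# Scores taken from the severity and occurrence tables quoted from [4].
SEVERITY = {"negligible": 2, "minor": 4, "serious": 6, "critical": 8, "catastrophic": 10}
OCCURRENCE = {"improbable": 2, "remote": 4, "occasional": 6, "probable": 8, "frequent": 10}


def hazard_score(severity, occurrence):
    """Hazard Score (Criticality) = severity x frequency."""
    return SEVERITY[severity] * OCCURRENCE[occurrence]


def prioritize(hazards, cutoff):
    """Return hazards scoring at or above the cutoff, highest score first."""
    scored = [(name, hazard_score(sev, occ)) for name, sev, occ in hazards]
    flagged = [item for item in scored if item[1] >= cutoff]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical hazards and ratings, chosen only to exercise the arithmetic.
hazards = [
    ("mislabeled specimen", "critical", "remote"),
    ("calibration drift", "serious", "occasional"),
    ("printer jam", "negligible", "probable"),
]

# The cutoff is an assumption; each scheme defines its own.
ranked = prioritize(hazards, cutoff=30)
for name, score in ranked:
    print(f"{name}: {score}")
```

Note what the cutoff does: anything scoring below it simply drops off the list, which is the "unstated implication" described above.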

This is a simple model of Risk Management. In other cases, the model is expanded to include estimated probabilities of detection and recovery. For instance, if there is a mechanism to detect an error when it occurs, we estimate the probability that the detection will succeed. Then, if a recovery mechanism exists, we also estimate the probability of recovering from a failure if it is detected. When detection and recovery are included, then we calculate the final risk score using those probabilities (probability x severity x detection & recovery). The resulting risk score is then known as a Risk Priority Number or RPN.
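A rough sketch of the RPN arithmetic follows. It assumes the common FMEA convention of three 1-to-10 rankings in which a higher detection score means the failure is less likely to be caught and recovered from; the function name and the example ratings are hypothetical.

```python
def risk_priority_number(severity, occurrence, detection):
    """RPN = severity x occurrence x detection, each ranked from 1 to 10.

    Assumed convention: detection = 1 means the failure is almost always
    caught and recovered from; detection = 10 means it goes unnoticed.
    """
    for name, value in (("severity", severity),
                        ("occurrence", occurrence),
                        ("detection", detection)):
        if not 1 <= value <= 10:
            raise ValueError(f"{name} must be between 1 and 10, got {value}")
    return severity * occurrence * detection


# Same hypothetical hazard, with and without an effective detection step.
with_detection = risk_priority_number(severity=8, occurrence=6, detection=2)
without_detection = risk_priority_number(severity=8, occurrence=6, detection=9)
print(with_detection, without_detection)
```

The severity and occurrence are identical in both calls; only the judgment about detection changes, yet the score moves by more than a factor of four.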

What are the Failure modes of FMEA?

FMEA has its place and there are numerous cases where it is appropriate for application. In healthcare services, it can provide extra insight into the sources of possible failure in a process. FMEA is particularly helpful in medical devices, where component failures and internal mechanisms can be predicted with some confidence (and where there is a finite number of components to consider). But its success in one field does not guarantee that its application in the laboratory or in other areas of healthcare will be helpful.

Here are some of the potential problems we see with risk mitigation techniques.

1. Model Error and Risk Ignorance.

We are not omniscient and often we lack the imagination for failure. Particularly in tightly coupled, highly complex systems, we simply cannot understand all the interactions, dependencies, and error modes of the technology and human factors. Inevitably, we will not be able to describe all possible failure modes. For healthcare, the failure modes are unfortunately almost unlimited.

Since human factors dominate healthcare, there is a real danger we are not seeing the “hidden factory” that exists when we brainstorm to map the process and identify the hazards.

2. We are all Misunderestimators.

For some failure modes that we can imagine, we are not able to accurately describe the probability of their occurrence. We are used to a normal distribution model, where extreme events occur extremely rarely. Unfortunately, we actually live in a “fat tails” environment, where extreme events occur far more often than we might predict.

“In effect, the assumption of normality will result in a serious underestimate of the frequency of major losses. 'Hundred-year storms' will occur much more frequently than once every hundred years.” [5]
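To put a rough number on that underestimate, here is a small sketch comparing the chance of an extreme event under a normal model with the chance under one fat-tailed alternative. The choice of a Student t distribution with 3 degrees of freedom is purely an assumption for illustration; nothing in the cited sources says real losses follow it.

```python
import math


def normal_tail(k):
    """P(X > k standard deviations) for a normal distribution."""
    return 0.5 * math.erfc(k / math.sqrt(2))


def student_t3_tail(k):
    """P(X > k standard deviations) for a Student t with 3 degrees of freedom.

    t(3) has variance 3, so k standard deviations corresponds to
    x = k * sqrt(3). The closed-form CDF for 3 degrees of freedom is
    F(x) = 1/2 + (1/pi) * (u / (1 + u^2) + atan(u)), with u = x / sqrt(3),
    which here simplifies to u = k.
    """
    u = k
    cdf = 0.5 + (u / (1 + u * u) + math.atan(u)) / math.pi
    return 1 - cdf


for k in (3, 4, 5):
    thin, fat = normal_tail(k), student_t3_tail(k)
    print(f"{k} SD event: normal {thin:.2e}, fat-tailed {fat:.2e}, ratio {fat / thin:.0f}x")
```

Under these assumptions, a five-standard-deviation event is thousands of times more likely in the fat-tailed model than the normal model predicts, even though both distributions have the same variance.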

For example, a colossal error in estimation occurred in the failure of Long-Term Capital Management, a gigantic hedge fund whose collapse nearly dragged down the major banks of the US. This hedge fund was run by Nobel Prize-winning economists whose intricate mathematical models led them to believe they could trade without risk. As they lost first tens and later hundreds of millions of dollars every month in 1998, their models assured them that this failure was so unlikely that it was “an event so freakish as to be unlikely to occur even once over the entire life of the Universe and even over numerous repetitions of the Universe.” [6] Nevertheless, between April and October of 1998, Long-Term Capital managed to lose 5 billion dollars, and required a government-arranged, privately-funded bailout.

As a matter of Social Science, humans are exceedingly poor at estimating probabilities, particularly about the frequency or severity of remote events. Our estimates are affected by the availability heuristic, or mental shortcut, which gives undue weight to some types of information (generally vivid) over other “mundane” information. For example, with mortality, people tend to overestimate the probabilities for dramatic causes of death (homicide, terrorism, etc.) over the far more frequent but less vivid causes of death (diabetes, stroke, etc.).[7] Because of the mundane nature of systematic analytical errors, it is unlikely we can properly estimate the frequency.

3. Consequence Myopia.

For other failure modes that we can imagine, we are also unable to accurately gauge the severity of their occurrence. We may believe the failure of a small part of the process will simply not affect the ultimate result.

When we look at the Challenger and Columbia disasters, we see that the engineers of NASA walked into those disasters with their eyes wide open – they saw previous evidence of O-ring failures and foam strikes but tragically underestimated the consequences if such events occurred again on a larger scale. With the Columbia, they even assured the shuttle astronauts to the very end that the foam strike wasn't a danger. This represents the most dramatic failure of risk assessment.

It's a common assumption that any lab errors are insignificant, because "It is reasonable to assume that improbable results would be challenged and implausible results disregarded by physicians." [4] But the fact is that we don't really know the impact of laboratory errors, because the interpretation by physicians is so variable. A recent NIST study found that a potential bias of 0.1 to 0.5 mg/dL on up to 15% of serum calcium results, a difference which would not generally be noticed by a physician, nevertheless had the potential to affect patient care nationally, at a cost of $60 million to $199 million annually. [8]

Another problem is how to measure severity. In traditional risk analysis (outside healthcare), the severity is often correlated with the expense of the repair, i.e. the failure of an expensive part is rated as more severe than the failure of an inexpensive part. However, any risk to a patient must be valued higher than the cost of a part. The problem here is that cost and safety are being reduced to a single variable.

4. Rotten Data

Sometimes our methods and metrics fail us. Even where we may have some actual data on error rates, the usefulness of the data may be compromised. For instance, if we know that the common behavior in laboratories is to follow manufacturer's wide limits, or to use other rationalizations to set limits as wide as possible, then the resulting error rates reported are actually artificially low. Our knowledge of analytical error rates is not accurate, because it is not measuring the rejections based on medically significant limits. Yet the fact that we have one or two studies suggesting low analytical error rates may now be used as evidence in other laboratories that it's okay to downgrade that risk factor.

Similarly, our knowledge of catastrophes may be hampered by the fact that there is no reporting incentive. Whenever a near-miss event occurs, there are many incentives to hide the problem (fear of legal action, fear of being blamed, fear of being viewed as , but few incentives to broadcast it through the organization. Since we don't encourage whistle-blowers, and often punish them and single out people as “bad apples” instead of looking for system problems, there is little incentive for people to report problems. Again, this may result in an artificially low probability, which further blinds us to the actual risk.

5. Garbage In, Garbage Out

“Even though they can be calculated precisely, [probabilistic risk assessment] PRA results are not exact because, in most cases, the input data have inherent uncertainties and the analysts results are based on explicit assumptions.” [9]

Since many of the measures of the FMEA are based on our subjective judgments, or our judgments on the relative probability or severity of errors, rather than observed performance in our laboratory, the RPNs will generally only reinforce our assumptions, rather than reveal our blind spots. Even when experts are consulted, the experts can have tunnel vision and may wish to reach a pre-ordained conclusion. In the worst-case scenario, the RPNs provide a patina of scientific evidence to our underlying biases – they justify what we wanted to do in the first place.

Finally, as we've seen above, since there is no standard for scale (either 1 to 5, 1 to 10, or the semi-quantitative categories of the HFMEA), and no standard by which to judge the final risk score, it will be difficult to compare risk assessments among instruments and laboratories. The choice of scale may in fact determine the outcome of the exercise (i.e., choosing a 1 to 5 scale over the 1 to 10 scale may change an unacceptable risk into an acceptable one). This lack of standardization may result in situations where, even with identical performance, one laboratory's acceptable risk is another laboratory's unacceptable risk, simply because one chooses a different scale than the other.

6. Confusion of probability with certainty

Just because an event is rare doesn't mean that it won't happen. Where the size of the risk is large, the probability is less relevant, because some outcomes are simply too costly to bear. If we know that one failure mode is a patient death, the probability of that occurring is less relevant. We simply do not want to accept risks – even rare or very rare hazards – where death is the outcome.

7. Ineffective Risk Mitigation

Our model may reveal failure modes, but our efforts to mitigate those risks may (1) be ineffective, and (2) cause other failure modes that we either don't recognize or don't mitigate effectively. If the model stands on shaky ground in the first stage, the epicycles that follow will only get shakier. We may believe that we have mitigated risks, when in fact we may only be deluding ourselves.

In conclusion, if we were to chart and score these hazards, our table might look like this:

| Failure Mode | Potential Causes | Severity | Probability | Hazard Score |
| --- | --- | --- | --- | --- |
| Risk Assessment Error | Model Error / Risk Ignorance | 8 | 8 | 64 |
| | Misunderestimation | 6 | 8 | 48 |
| | Consequence Myopia | 8 | 6 | 48 |
| | Rotten Data | 6 | 6 | 36 |
| | Garbage In, Garbage Out | 4 | 8 | 32 |
| | Confusion of Probability with Certainty | 3 | 6 | 18 |
| | Ineffective Risk Mitigation | 2 | 6 | 12 |

Then again, those numbers were based on my flawed judgments, so perhaps a more realistic chart would look like this:

| Failure Mode | Potential Causes | Severity | Probability | Hazard Score |
| --- | --- | --- | --- | --- |
| Risk Assessment Error | Model Error / Risk Ignorance | unknown | unknown | unknown |
| | Misunderestimation | unknown | unknown | unknown |
| | Consequence Myopia | unknown | unknown | unknown |
| | Rotten Data | unknown | unknown | unknown |
| | Garbage In, Garbage Out | unknown | unknown | unknown |
| | Confusion of Probability with Certainty | unknown | unknown | unknown |
| | Ineffective Risk Mitigation | unknown | unknown | unknown |

Which table is correct? Do you trust my judgment? Will you trust the judgment of the manufacturer when they construct a table like this for your new instrument?

How do we know if we've done our risk mitigation right?

“There is no easy way to measure the quality of a FMEA because FMEA quality is, in essence, an assessment of a model....the actual frequency of events after mitigation can be observed; however, as mentioned, catastrophic events will be extremely unlikely, regardless of the quality of the mitigation.”[10]

Here's where quality control comes into the Risk Management and Assessment system. QC gives real data on the performance of the actual process. This in turn can provide better FMEA ratings and scores. QC and its associated metrics give Real-World data to the RM system.

From the Risk Management perspective, QC is a detection mechanism; you use QC to detect hazards and thus catch them before they harm a patient. Thus, in some ways, your Risk Assessment could produce a recommendation for running more controls or even running QC more frequently. But that assumes that the Hazard Score, Criticality or RPN is “high” enough that you pay attention to it.

Are the risks of Risk Management acceptable?

Risk Management performs a great service when it uncovers hidden or new hazards and facilitates the mitigation of those risks. The biggest danger is that Risk Management can be manipulated so that instead of uncovering, managing, or mitigating risks, the Risk Management process enables people to accept risks that should be unacceptable. If there are risks we currently consider unacceptable in laboratory medicine, but through Risk Management, they suddenly become acceptable, that transformation should be very carefully scrutinized.

Is it worth mitigating the risks of 100-Year Storms?

In many traditional risk assessments, particularly business and financial processes, there is little incentive to mitigate the risk of the '100-year storm.' In the case of a financial disaster or “black swan” event, a lot of money gets lost, and maybe the business goes into bankruptcy, but people don't get physically hurt. That type of risk management doesn't translate well to healthcare.

If mitigation and quality efforts are going to be decided on the basis of FMEA and RPNs, organizations are going to be tempted to ignore the “small risks”, either by self-delusion or by the illusion of precision that RPNs provide. The result of that is that we are embracing a New Orleans-size risk. We are hoping that we get lucky and that any Katrina-level event happens on someone else's watch.

The New Orleans approach to risk was to build levees to withstand category 3 hurricanes, but not category 4 or 5. Congress did not authorize funds to prepare for a category 4 or 5 hurricane. Even then, funds for the maintenance and improvement of the levee system were cut due to budget priorities of the federal government. There are mounds of reports, memos, and warnings to officials at all levels of the government pleading for better preparedness. But these calls went unanswered. Katrina is an illustration of the "Leaky Roof" syndrome. As long as it doesn't rain, there's no need (and no motivation) to fix your leaky roof. But as soon as it starts raining, it's too late to fix the roof. And if you haven't been checking your roof, you might not even realize the leak until it's too late...

In the Netherlands, they took a different approach to Risk Management for a 100-Year flood. In 1953, a flood killed nearly 2,000 people when a violent storm broke dikes and seawalls. Afterward, the Dutch vowed that this would never happen again.

For the next twenty-five years, the Netherlands spent nearly 8 billion dollars to construct “a system of coastal defenses that is admired around the world as one of the best barriers against the sea's fury – one that could withstand the kind of storm that happens only once in 10,000 years.” [11]

It costs nearly $500 million each year to maintain the system known as Delta Works, but this is viewed as an insurance, and an acceptable cost when measured against the Dutch gross national product. With justification, the Dutch can proudly say, “God made the world, but the Dutch made Holland.”
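The difference between designing for a 100-year event and a 10,000-year event can be made concrete with a little arithmetic. The sketch below assumes each year is independent with a constant probability of 1 / (return period), which is exactly the kind of simplification that fat tails call into question; the 30-year horizon and the function name are illustrative choices, not figures from the cited articles.

```python
def chance_of_at_least_one(return_period_years, horizon_years):
    """Probability of at least one event within the horizon.

    Assumes independent years, each with probability 1 / return_period_years.
    """
    annual_probability = 1.0 / return_period_years
    return 1.0 - (1.0 - annual_probability) ** horizon_years


for return_period in (100, 10_000):
    chance = chance_of_at_least_one(return_period, horizon_years=30)
    print(f"1-in-{return_period}-year event over 30 years: {chance:.1%}")
```

Under these assumptions, a "100-year" event has roughly a one-in-four chance of arriving within 30 years, while a "10,000-year" event has well under a 1% chance over the same period.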

Sadly, it turns out that by the time Katrina hit New Orleans, it wasn't a Category 3 hurricane anymore. New Orleans actually suffered three disasters: first, the natural disaster of Katrina, followed later by a disaster in the federal emergency response, but much earlier in the timeline, it suffered a system failure in the design of the levees:

“Investigators in recent days have assembled evidence implicating design flaws in the failures of two floodwalls near Lake Pontchartrain that collapsed when weakened soils beneath them became saturated and began to slide. They have also confirmed that a little-used navigation canal helped amplify and intensify Katrina's initial surge, contributing to a third floodwall collapse on the east side of town. The walls and navigation canal were built by the US Army Corps of Engineers, the agency responsible for defending the city against hurricane-related flooding.”[12]

Here is a classic failure of Risk Management. We underestimated the danger. We refused to plan for it adequately. Even when we faced a danger that we thought we had prepared for, the risk mitigation was not implemented correctly, so it failed.

Let's hope we don't make the same mistakes in healthcare.

References

1. Fred D. Lasky, PhD, “Technology Variations: Strategies for Assuring Quality Results”, Lab Medicine, October 2005, Volume 36, Number 10, pp.617-620; Donald M. Powers, PhD, “Laboratory Quality Control Requirements Should be Based on Risk Management Principles”, Lab Medicine, October 2005, Volume 36, Number 10, pp.633-638; Luann Ochs, MS, “QC for the Future: CLSI Standard Development and Option 4 Proposal”, Lab Medicine, October 2005, Volume 36, Number 10, pp.639-640.

2. J. Reason, Managing the Risks of Organizational Accidents, Ashgate Publishing Limited, Aldershot, United Kingdom, 1997, pp.175-176.

3. Joseph DeRosier, PE, CSP; Erik Stalhandske, MPP, MHSA; James P. Bagian, MD, PE; Tina Nudell, MS, “Using Health Care Failure Mode and Effect Analysis™: The VA National Center for Patient Safety’s Prospective Risk Analysis System”, The Joint Commission Journal on Quality Improvement, Volume 27, Number 5, pp.248-267, 2002.

4. Donald M. Powers, PhD, “Laboratory Quality Control Requirements Should be Based on Risk Management Principles”, Lab Medicine, October 2005, Volume 36, Number 10, p.636.

5. Ralph C. Kimball, “Failures in Risk Management”, New England Economic Review, January/February 2000, p.6.

6. Roger Lowenstein, When Genius Failed, Random House, New York, 2000, p.159.

7. Barry Schwartz, The Paradox of Choice, HarperCollins, New York, 2000, pp.57-61.

8. The Impact of Calibration Error on Medical Decision Making, http://www.nist.gov/director/prog-ofc/report04-1.pdf

9. J. Wreathall and C. Nemeth, “Assessing risk: the role of probabilistic risk assessment (PRA) in patient safety improvement”, Qual. Saf. Health Care 2004; 13:206-212.

10. Jan S. Krouwer, PhD, FACB, “An improved Failure Mode Effects Analysis for Hospitals”, Arch Pathol Lab Med, Vol. 128, June 2004.

11. William J. Broad, “In Europe, High-Tech Flood Control, With Nature's Help”, New York Times, September 6, 2005.

12. Joby Warrick, Michael Grunwald, “Investigators Link Levee Failures to Design Flaws”, Washington Post, October 24, 2005, A01.